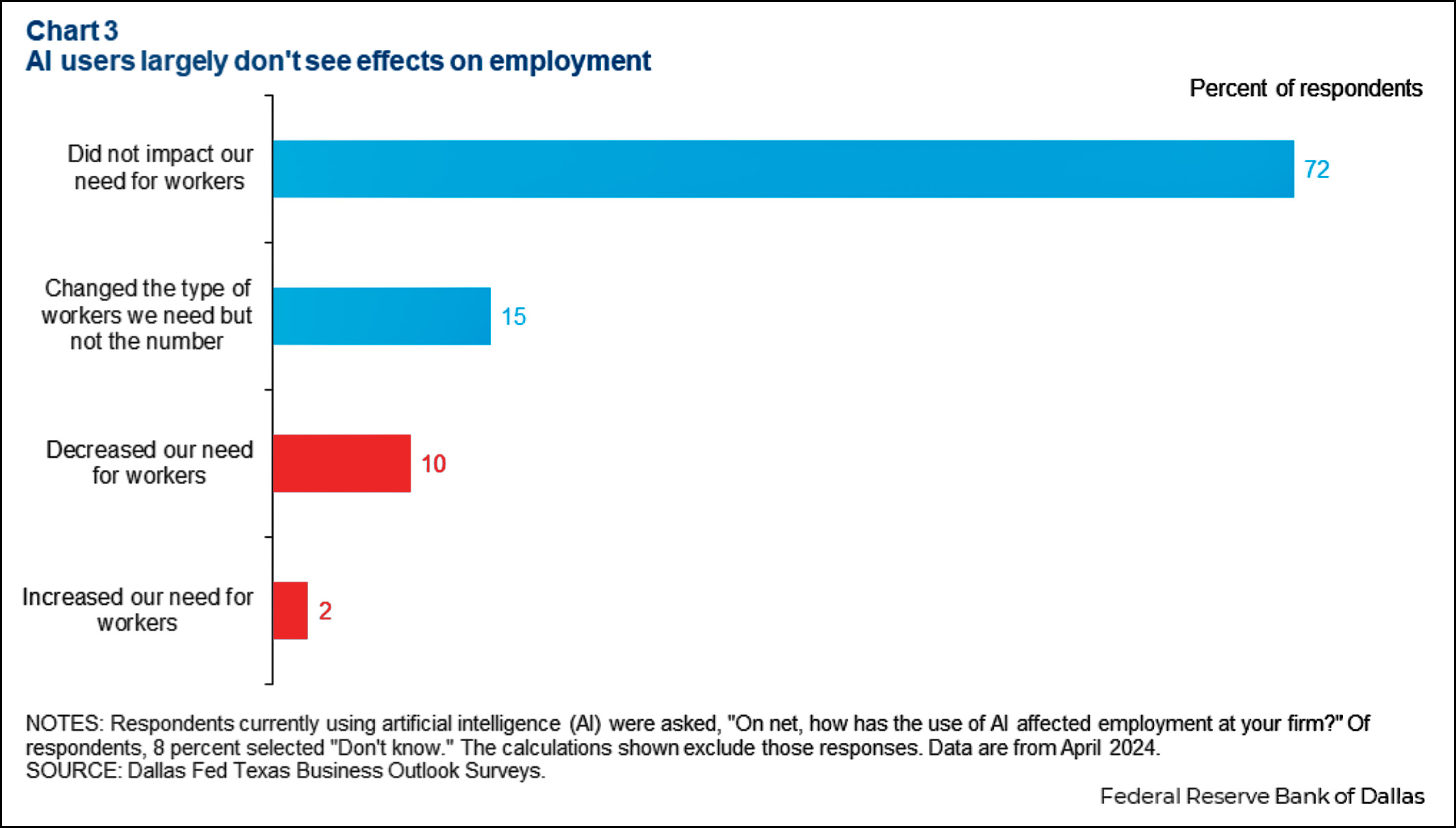

The Dallas Fed reports today that AI has so far had "minimal" effects on employment in Texas firms. Maybe. But first, take a look at their chart:

Among firms that use AI, that's a net of 8% of firms saying it's decreased their need for workers. That doesn't seem so minimal to me—especially for a technology that was effectively introduced only in late 2022. I wonder how many actual employees this represents?

It's also instructive to listen to the anecdotal evidence:

Most companies using AI report it has not affected their need for workers. A financial services firm noted, “AI is helpful in offloading workload and increasing productivity, but we are not at the point where AI is going to replace workers.”

Ten percent of companies using AI say it reduced their need for employees; these firms were largely using AI for customer service and process automation. A manufacturer noted, “There is strong potential for AI to automate or eliminate many clerical jobs in our business that will save our team time and money.” Workforce reductions from AI use are notably more common among large firms than small ones.

If AI is offloading workload, then it's going to replace workers pretty shortly. And if there's "strong potential" to eliminate clerical jobs, then those jobs are going away.
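A quick sanity check on that net figure, as a rough sketch: "net" here means the share of AI-using firms reporting a decreased need for workers minus the share reporting an increased need. The 10% comes from the Fed text quoted above and the 8% from the chart; the 2% "increased" share is only what those two numbers imply, not a figure quoted from the report, and `net_share` is just an illustrative helper name.

```python
# Shares of AI-using firms, in percent.
decreased = 10     # "Ten percent of companies using AI say it reduced their need"
net_decrease = 8   # net figure cited in the post

# net = decreased - increased, so the implied "increased" share is:
increased = decreased - net_decrease


def net_share(decreased_pct, increased_pct):
    """Net share of firms reporting a reduced need for workers."""
    return decreased_pct - increased_pct


print(increased)
print(net_share(decreased, increased))
```

Note that this counts firms, not workers, which is why the headcount question stays open.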

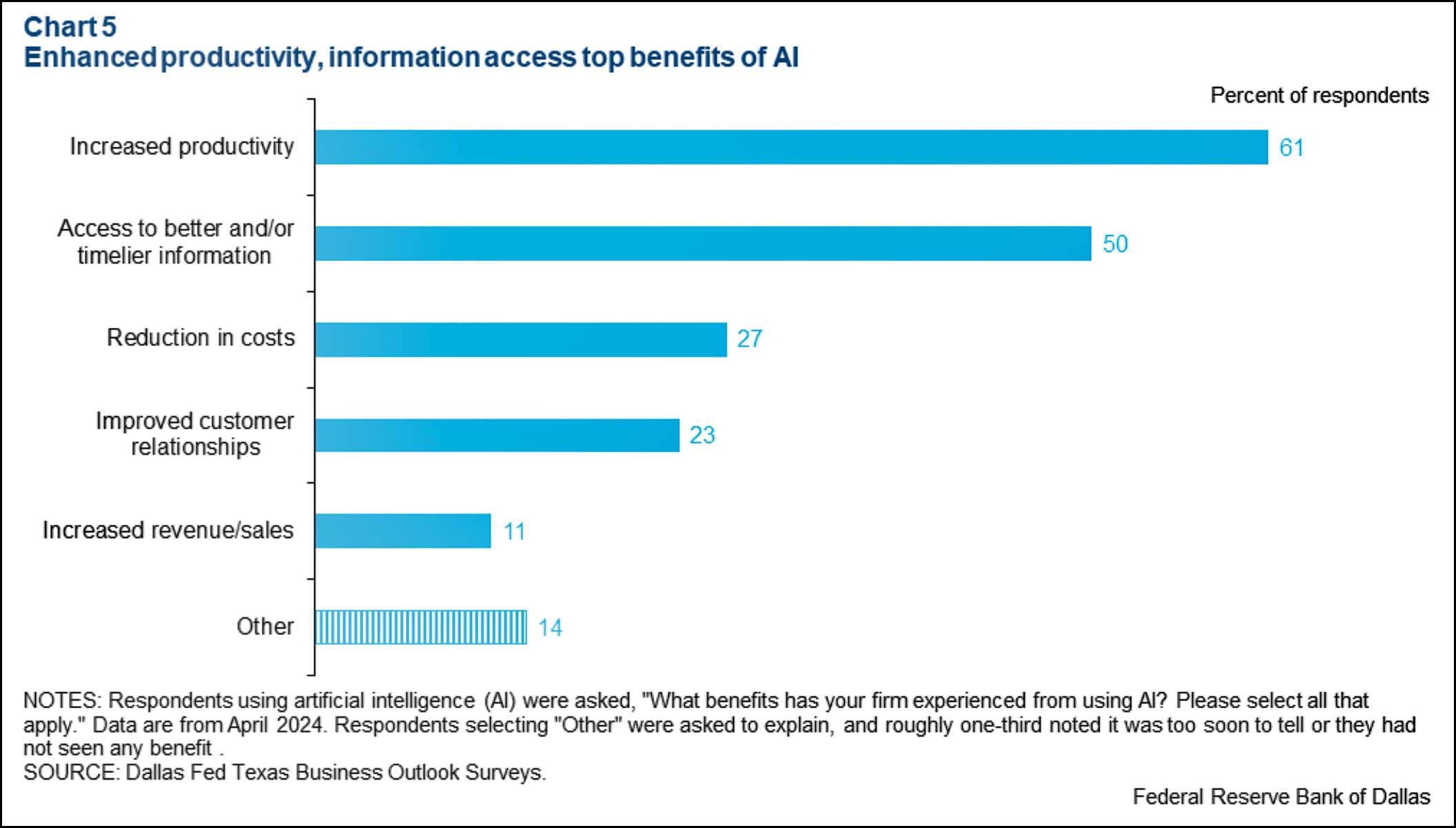

About 20% of all firms surveyed say they use generative AI (Copilot, GPT-4, Claude, etc.). That's a helluva lot for a technology that's only been around in usable form for two years. And the firms using it sure seem happy with the results:

I'll concede that findings like this aren't always reliable. Whoever fills out the survey is guessing at this stuff and might not be guessing correctly. Still, even if some of the AI use is sketchy (Copilot to help write emails, for example), it sure seems to have gained adoption remarkably quickly.

We have plenty of employees using it as a research tool and some for image generation, but we don't yet see the need to pay Microsoft an additional $30 per employee per month. We are making employees aware that they cannot put corporate data into cloud AI portals for analysis.

I think forward-facing customer service webchat employees are threatened a bit at other companies, but we don't have that. We're very technical and need humans for the complexity of most tasks.

Regarding using AI as a research tool: a group of us STEMmies have been testing the various LLMs with sets of questions, and they cough up a significant amount of bullshit (err, hallucinations). When I ask for biochem and virology references to back up the answers to various questions, roughly a quarter of the references are either partially wrong or totally made up (at least when I search for them in Google Scholar). One of the other testers is a physical chemist. The LLMs can handle basic chemical reactions, but when the reactions get complex, all the LLMs start making stuff up. And a non-STEMmy etymologist I communicate with gets about 90 percent failure rates for the origins of English words from the lists he gives them (you'd think they'd have sucked up the OED into the training data, but maybe they didn't want to pay the hefty subscription?). I wish we had a lawyer on the team, because I'd be curious whether they output bullshit caselaw references.

Anyway, double-check any of the info these LLMs give you!

Yes, public AIs output hallucinated caselaw references. https://www.reuters.com/legal/new-york-lawyers-sanctioned-using-fake-chatgpt-cases-legal-brief-2023-06-22/

They got fined for it.

My company would be an ideal customer for AI (lots of rebate processing), but we're not touching it due to privacy concerns. The entire company is under AI lockdown; we can't even use tools like Otter.AI to take notes during meetings. My husband's aerospace company is the same.

Anyone who's offloading jobs to AI is doing so with an in-house private bespoke system that doesn't talk to anything else. Nobody wants AI learning their business strategy, plans, and competitive advantages.

I use ChatGPT at work regularly, and it's made me much more productive.

I'm a senior software engineer, and for simple tasks I can tell ChatGPT "write me some code that will load this, turn it into that, and write it out again." Saves me an hour or two of tedium. But the kicker is, it's often not quite right, and without 40-some years' experience with this crap I would have a hard time finding the errors. When I retire (soon, but not soon enough) ChatGPT won't be able to take my place. In the long run, the company would do better to hire an entry-level coder to do these routine tasks, so they can learn from their mistakes and be better prepared to take over my job.

I would hate to be a 30-year-old coder these days, but I'd also hate to be a large company when all the senior software engineers are retired.

See my reply to RonP above. We have similar experiences as you with other subjects.

As a retired software engineer, I just don't see the advantage in specifying the problem in English as opposed to specifying it in code, where I am more fluent. (And English is my first language.)

There's some difference between "replacing" people and jobs--wouldn't the likely pattern be that as AI is incorporated into operations, the need for new hires is lessened, and the likely response to quits and retirements is a restructuring of work among existing employees? So a slowdown in overall hiring, as opposed to firing employees.

Best I can tell both Chart 3 and Chart 5 are about any kind of AI — neither chart is restricted to only Generative AI. See this link for the questions themselves: https://www.dallasfed.org/research/surveys/tbos/2024/2404q

Key detail is where it says in Question 3 NOTES: "Respondents selecting any version of "Yes" in question 1 were asked..."

And, these days nearly 100% of firms are using Traditional AI, though it's likely to be so well integrated into automated processes that most respondents would have no reason to realize if the IT they use every day fits the definition provided in the Fed's question.

I'm going to say that (a) I don't believe them, and (b) any company using this kind of AI extensively is degrading their own production/services. The only way AI can replace, say, email writing, is if the company was already terrible at writing emails.

I would also hazard a guess that saying "AI adoption is making us able to slim down" sounds cooler than "we're laying off the losers to boost our bottom line in time for the next bonus cycle."

I don't think so. AI, for the most part, remains in the feature category as opposed to the product category. IOW, it's a tool that helps increase productivity and not much else beyond that, except for niche applications beyond LLMs.

Even if GPT5 is indistinguishable from a living human, it remains limited to I/O operations as opposed to independently functioning with limited support, and it still needs to be checked for errors.

Interesting read here ... I should have included this with my original comment.

https://stackoverflow.blog/2024/06/10/generative-ai-is-not-going-to-build-your-engineering-team-for-you/

These types of surveys are never reliable, as the respondents are in fact guessing. There is also the issue that nobody wants to admit that they spent a boatload of money on stuff that ended up producing no real advantage. At best, in the near term, AI will turn almost nonexistent customer service into bad customer service.

Yesterday I called a car dealer to make an appointment for auto maintenance, and the call was automated. While the program paused to look up information or record the time and date for the appointment, sounds of typing on a keyboard were played, as if the voice 'talking' to me were a person making the inputs. An interesting embellishment.

So far, where I work, it’s a big zero impact.

"Among firms that use AI, that's a net of 8% of firms saying it's decreased their need for workers."

No it isn't. It is a net of 79% saying it either didn't affect their need or increased their need for workers.

We are not hearing about major layoffs except at a handful of firms that are facing bad financial times (like Walgreens today saying they will be closing a bunch of stores). So far it would seem that AI is supplementing human workers not replacing them.

Yep, a relative of mine was on a contractor team that designed store layouts for Walgreens, both for new stores and as the seasons changed. They got laid off in January. It's a shame: they need a few more years of frugal living before they can afford to retire, but now I fear nobody will hire them, as they can't drive and are 60-something.

Not so fast!

Every algorithm that comes online now is called "AI" by the sales people. It looks like the "AI-revolution" occurred instantly. All of a sudden everything is called "AI" that last year would have been called "algorithm" or "software" or "App".

Just because something is called "AI" does not mean it is.

As far as I know there isn't even a proper, generally accepted definition of "intelligence", far less of "AI". Which means it is open season for hype no matter how outrageous.

I can't help adding this: If all the money that went into "AI" had been invested in a "smart" electric grid we would have one now--with significant benefits for the climate. Silicon valley folks have a special talent for wasting resources on pointless projects.

AI is just what we don't know how to do yet. Playing chess used to take intelligence, but after Deep Blue beat Kasparov, it was min-max optimization with pruning, not AI any more. Expert Systems were AI, but when they didn't work out so well they weren't. Are Machine Learning, Large Language Models, and Stable Diffusion algorithms AI? They are at the moment, but pretty soon their limitations will become better known, and then they won't be.
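For anyone who hasn't met it, "min-max optimization with pruning" looks roughly like the toy sketch below: one player picks the move that maximizes the score, the other picks the move that minimizes it, and branches that can no longer change the outcome are skipped (alpha-beta pruning). The nested-list game tree and the `alphabeta` name are my own illustration, not anything from a real chess engine, which would add move generation, an evaluation function, and a great deal more.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Minimax value of a toy game tree, with alpha-beta pruning.

    A node is either a number (a leaf score from the maximizer's point
    of view) or a list of child nodes.
    """
    if not isinstance(node, list):
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer already has a better option elsewhere
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer already has a better option elsewhere
    return best


# Maximizer moves first, then the minimizer picks a leaf.
tree = [[3, 5], [2, 9], [0, 1]]
print(alphabeta(tree, True))
```

In this tree the leaves 9 and 1 are never examined: once the second and third branches are known to be worse than the first for the maximizer, there's no point looking further.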