Shit is getting real.

A few days ago OpenAI released ChatGPT, a version of its AI language engine that's been trained to hold natural conversations with people. It has stunned nearly everyone who's used it:

Still blows my mind there’s zero mainstream coverage of the only thing everyone in tech is talking about

It’s like we just split the atom and everyone is talking about football

— Oliver Emberton (@oliveremberton) December 4, 2022

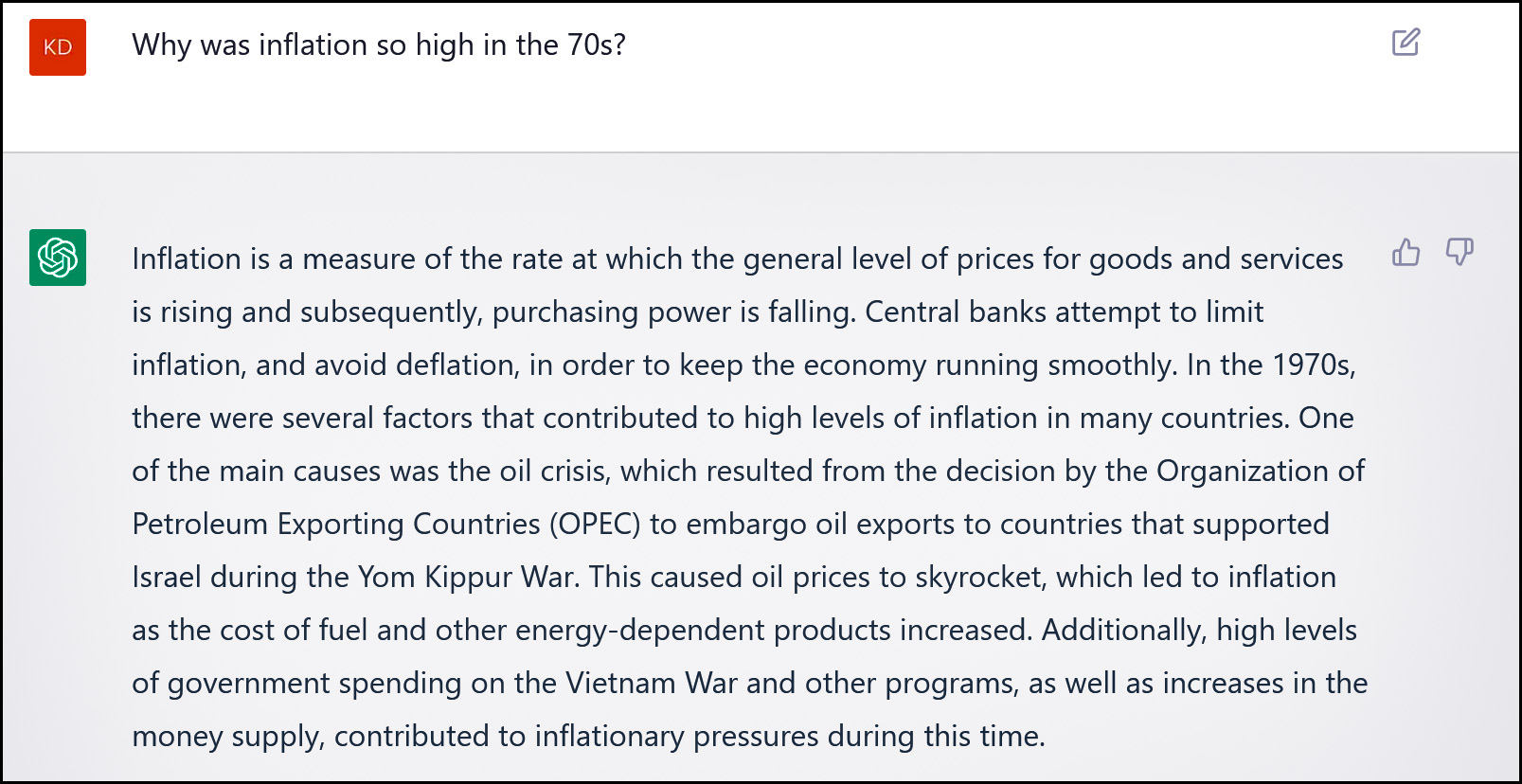

Here's a sample from my first conversation:

High marks! It could have added something about wage-price spirals, but it was probably best not to. So high marks for judgment too.

(My original version of this question was about inflation today, but ChatGPT informed me that it was not connected to the internet and its knowledge cutoff is 2021.)

ChatGPT is capable of far more than my little question suggests, and does a creditable job of carrying on a conversation. If you try this, you'll probably be impressed but left thinking that it's not really all that bright. And you're right:

IQ of ChatGPT is 83.

It corresponds to low average.

Here is where it failed🧵1/11 pic.twitter.com/Lgm3muCTiR

— Sergey Ivanov (@SergeyI49013776) December 1, 2022

It's best not to take this too seriously, but an IQ of 83—or anything in the vicinity—is spectacular. Why? Because it means that language models with an IQ of 100 or 150 aren't far away. On that note, it's worth reading the whole thread to see what ChatGPT's shortfalls are. They may seem fairly humorous at first, but if you read them in the context of an IQ of 83, they're suddenly far more understandable.

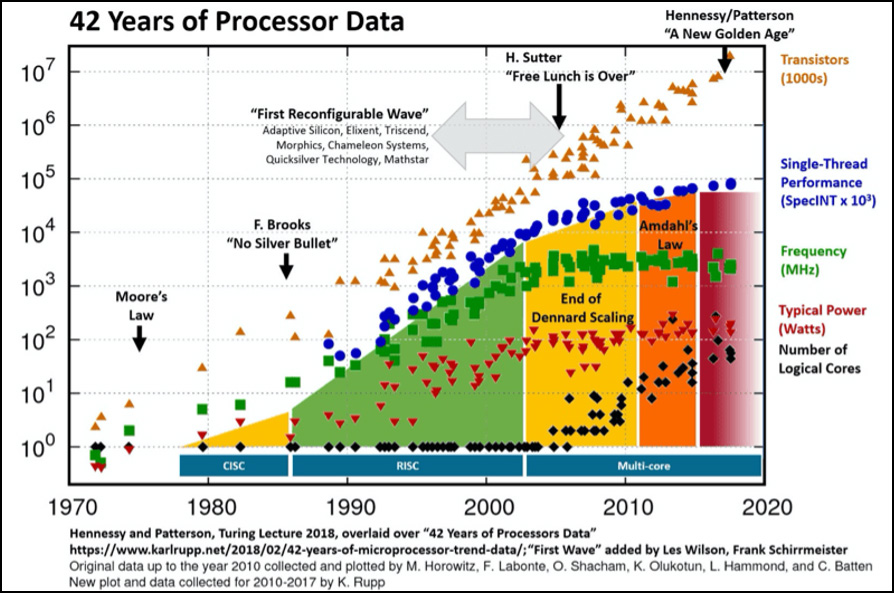

The progress of large language models is due to advances in both software and hardware. In particular, the GPU revolution has kept hardware processing power on an upward path long after the naive version of Moore's Law ran out of steam.

None of this means that artificial intelligence is here. It's not. Nor does it mean that millions of people are going to lose their jobs in the immediate future. But AI progress continues to be stunning, and the greatest progress is likely to be in tasks that are purely cognitive and don't require much, if any, interaction with the physical world—which turns out to be quite difficult.

But all of us wordslingers? We'd better watch out.

That reads like a REPORT and not a conversation. No one in real life converses like that. They lecture like that.

My cousin could truly receive money in their spare time on their laptop. their best friend had been doing this 4 only about 12 months and by now cleared the debt. in their mini mansion and bought a great Car.

That is what we do.. https://profitguru9.blogspot.com/

I hear your cousin's been having marriage issues. Her husband keeps talking about his disinterest in having unprotected sex -- that's weird isn't it? I mean, didn't you tell me that she was on the pill? What's up with that?

Exactly. No thought. The AI spit back programmed data. The result was nothing more than an encyclopedia entry.

I remain skeptical. Humans are good at attributing volition and feelings to objects that even vaguely resemble living and thinking beings. Conversation simulators, even fairly simple ones, have been fooling people into thinking they are conversing with real humans for decades. That particular answer sounds kind of like a Wikipedia article, so by itself it's not all that impressive.

Also, as usual, we need to define what we mean by "artificial intelligence" if we are going to talk about whether and when this will be achieved.

What are you skeptical about, the philosophical question, or that the robots are coming for your job?

The former is a ton of fun to think about, but doesn't have much effect on the latter, which is not fun to think about.

I'm skeptical that anything that could reasonably be called "artificial intelligence" is "getting damn close."

OK. I agree that is fun to think about.

It'll give us something to do while applying for unemployment.

Secretaries did more than just type up memos.

That didn't prevent the end of the steno pool...

Like EVERY DAMN TRANSITION IN HISTORY the change will not be that on Jan 1st 2026 every "writer" in America is fired. Rather it will be that one small use case after another will find that either an existing writer can be more productive (so that a second writer need not be hired) or the ultimate user of the written copy can do the job themself ("write up their own email") and so does not need to hire a writer.

It took what, maybe 40 years (1970 to 2010?) for secretaries to become essentially obsolete. In 1970 it's only rare, idiosyncratic individual managers who do without one; by 2000 the only secretaries left are those attached to old managers about to retire.

BUT we also get a new type of job, the Admin Assistant, which is not just typing dictation but other sorts of work...

Ask it emotionally charged questions (I'm not opening an account with an AI company...or should I?).

I don’t see how anybody can call ChatGPT an AI, let alone an AGI. I’ve seen a few of its works and while the prose is acceptable, the basic problem is that it simply doesn’t understand. It’s able to connect certain concepts and topics because it has learned to follow the underlying rules. Well, that’s quite an achievement, I guess.

But while it can spin pretty explanations or lectures, nothing it says is filled with understanding. It writes words without meaning because it is completely unable to understand: What does being poor mean to a neural network? Or the activity “swimming”? Or, for that matter, anything else?

Still, it’s an amazing achievement. But the foremost problem seems to me the claim of “intelligence”, i.e. cognition. No matter how sophisticated these so-called AIs may be, none of them has the ability of cognition. I don’t even know if this would be the case if they could pass a Turing test. After all, a Turing test only proves that a machine could deceive some human, not that it understands a single word or concept of what it says.

Commercial art, call-center customer support, and relatively simple line-of-business programming tasks all became tasks robots can do this year.

Lawyers will slow adoption down, but I'm willing to bet a specialization of this tool could handle the bottom half of legal advice by complexity.

What's on the list for next year?

I have never had a problem solved by the "AI" automatic voice part of the call center routing. Usually, I end up saying over and over, "talk to a human being," and then after some time, the "AI" gives up and routes me to a human being who can solve my problem. The automatic messaging system works only for rote information such as addresses and account balances. Color me unimpressed. I am also still waiting for fast food personnel to be replaced by robots. I keep hearing about it but never see it.

> also still waiting for fast food personnel to be replaced by robots. I keep hearing about it but never see it.

That's simple economics. Two facets:

- The robots still cost more than ~$17/hr to run.

- The cashiers have a loss-prevention/store maintenance function. Not that they stop things, but having a human around stops some number of people from doing stupid shit, which has value.

Together, that means humans still have a role for now.

I earn $162/hour telecommuting. I never would have imagined this to be honest, but my closest female companion is making $21,000 a month working on the web, it was really shocking to me, she prescribed me to just try it,

COPY AND OPEN THIS LINK........

There are already entire AI-bots that will write news articles -- I mentioned this the other month -- and now there is at least one AI-bot that will write long-form term papers.

The industry most at-risk is local journalism, then the term paper services of poor smart folks. Eventually, blogs will be full of AI-bots.

You made an interesting observation which is a bit frightening. I hope I am in the ground before humans lose the ability to write. But it would be nice if this blog had an AI bot to block the SPAM.

Why?

Where do you imagine is the human demand for endless streams of AI-written articles?

These things exist today as SEO parasites; their reason for existence is not to be read, it's to be landed on via a Google search, and so to display ads. But the same technology that can generate these pages can also see that they are garbage and not recommend them.

In other words, they are a temporary phenomenon, or a chronic background infection; but they are not the primary long-term story.

This: https://knowledge.wharton.upenn.edu/article/ai-in-journalism/

MMMM?? Elizabeth uses ChatGPT to write her posts????

When does AI take over the Federal Open Market Committee?

Having made several negative comments about the usefulness of AI, I am compelled to say that Grammarly is an extremely helpful AI.

If Kevin could show that the excerpt about inflation was something that the robot or computer had come up with itself by living through the '70s or even by reading about it, he would have some proof of actual AI. But I suspect that this is just boilerplate inserted by a programmer, which comes up when triggered by a question about inflation. Kevin's question was a pretty feeble test.

So far I have seen little evidence of actual learning by "robots". What has certainly occurred is more and more elaborate programming involving explicit reaction to specific inputs. "Self-driving" cars are obviously not learning, they are being fed increasingly elaborate coding - it is the programmers who are learning. But computers don't forget, so once a solution is programmed it will always work and not be ignored when the driver is asleep or drunk or talking on a phone.

Whether you call this kind of thing programming or AI, more use should be made of it in medicine. There is a huge amount of information that medical diagnosticians must assimilate, and this should be a continual process as new information becomes available. Computers can store all the relevant information, and they can be programmed to make diagnoses. What still happens now is that physicians must be individually programmed by a long and expensive process; every single one must go through this process. The kind of information that they must acquire and the algorithms that they use can be set up once and for all and transferred instantly between computers. Of course, as in the case of robot cars, there will be a long process of refinement of the algorithms, which at least now is done by programmers, not the robots themselves.

"So far I have seen little evidence of actual learning by "robots". What has certainly occurred is more and more elaborate programming involving explicit reaction to specific inputs. "Self-driving" cars are obviously not learning, they are being fed increasingly elaborate coding - it is the programmers who are learning."

This is absurd, and reflects a complete ignorance of how these systems work. No one programmed in the answer to Kevin's question, and no one is programming in the location of every stop sign for autonomous vehicles.

Google "machine learning" if you'd like to be educated on the topic. Wikipedia has a reasonably good explanation: https://en.wikipedia.org/wiki/Machine_learning

Or better yet...try it out. Sign up for it:

https://chat.openai.com/chat

I asked it to code an Angry Birds game in Java. It wrote out Java code better than many Comp Sci graduates, including encoding the physics of a parabola in code. And it did it instantly. I'm trying to figure out how this is possible if it doesn't understand physics and computer science, and I'm sorry, but an IQ of 83 is truly not accurate. Most high school and college grads do not understand how these formulas work well enough to write them out in code.

I am going to spend some time thinking about exactly what my college degree is for. Meanwhile, the AI revolution is upon us, and I am truly scared.

I am sorry to say that you don't appear to understand anything about how ChatGPT or these large language models work.

There is no boilerplate. There is no elaborate programming. These things just do not work that way.

Sadly, I cannot point you to any good articles that properly explain how it does work. Call me next week, maybe.

GPT and the like are just Dissociated Press with a bigger corpus and more efficient implementation. Dissociated Press was an EMACS macro from back in the 1970s that took a buffer of text and a starting phrase from that text. It then generated as much text as you could endure: basically a semi-coherent stream based on the text in the original buffer. Basically, GPT uses an efficient compression algorithm to store a massive corpus of text and generates text the same way. Machine learning gets a really impressive compression ratio, but it isn't a breakthrough in understanding. Clever Hans had no idea of how addition worked, and GPT has no idea of what it is saying.
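For readers who never ran it, here is a minimal sketch of the kind of generator being described: a word-level Markov chain that records which words follow each run of `order` words in a corpus, then walks those records at random. It is an illustration under stated assumptions, not a port of Emacs's `dissociated-press` command (which works on buffer text directly) and not a model of how GPT works; the function names `build_chain` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def build_chain(text: str, order: int = 2) -> dict:
    """Map each run of `order` consecutive words to every word that follows it in `text`."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i : i + order])
        chain[key].append(words[i + order])
    return dict(chain)


def generate(chain: dict, start: list, length: int = 30, seed=None) -> str:
    """Random walk over the chain, starting from the word run `start`.

    Each step picks one of the words that followed the current run somewhere
    in the corpus, so every local transition is "real" even though the whole
    output need not make sense.
    """
    rng = random.Random(seed)
    state = tuple(start)
    output = list(state)
    for _ in range(length):
        followers = chain.get(state)
        if not followers:  # dead end: this run only appears at the very end of the corpus
            break
        nxt = rng.choice(followers)
        output.append(nxt)
        state = state[1:] + (nxt,)  # slide the window forward by one word
    return " ".join(output)


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the dog sat on the cat"
    chain = build_chain(corpus, order=1)
    print(generate(chain, ["the"], length=12, seed=1))
```

With a small `order` and a large corpus, the output is locally plausible but globally drifting, which is the "semi-coherent stream" effect the comment refers to.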

P.S. The last FORTRAN program Nicholas Negroponte ever wrote was a clever doodling program back in the 1970s. You doodled on the tablet, then it took over and kept doodling in your style. It was pretty amazing to watch, so I understand why similar demos that can store a much larger image database are impressive as well.

> "Clever Hans had no idea of how addition worked, and GPT has no idea of what it is saying"

Have you seen ChatGPT's responses when asked about this?

Worth considering that we also do not really have any idea of what we are saying most of the time....

This is really more about voice recognition technology than artificial intelligence. Isn't it? It's impressive as such. FWIW I just asked Google assistant the same question and it quickly quoted a web site called economicshelp.org that briefly summarized the response shown here by ChatGPT. It asked if I wanted "more context" but I didn't listen to it go on.

I agree that voice recognition tech has come a long way over the past 20 years (since it was available for use to anyone that wanted to mess with it back then). But the answer to a generic question about something that can be looked up on the internet hardly qualifies as AI.

There is ZERO voice recognition software in ChatGPT. It is a text-based system and cannot handle voice by itself. That would be trivial to add, but the voice recognition part has nothing to do with the way that ChatGPT actually works.

Actually, the quality of conversation has declined in the internet/social media age, but it's easy to ferret out independent minds, which AI obviously isn't.

That isn't intelligence at all. It is just statistical correlations. You can't treat that as a thing that understands what emerges.

Thought folks may like this

https://maxtaylor.dev/posts/2022/12/lisp-repl

How do you start conversing with an AI? Well, break the ice with some jokes. First I asked it to tell me a joke. It was funny (me being a nerd). Then I told it one and asked if it got it. It made valiant attempts but was pretty miserable at it. Finally, I asked if it understood a passage from Heinlein's *The Moon Is a Harsh Mistress*, in which Mannie (the "programmer") is explaining types of jokes to Mike (the AI). ChatGPT totally missed the point and got huffy when I said that, being a computer, I guessed it got fooled too.

OTOH, it's pretty nice if you just want a souped-up search engine. Though again, it got huffy when I asked what were the sources of its answer, so maybe "search engine" is the wrong paradigm too.

I suspect the programming team has gone to great lengths to make sure nobody accuses it of sentience (it reiterates that theme often) - ELIZA was more personable over half a century ago!

The rest of the topic aside, Oliver Emberton is wrong: I've seen at least two articles in mainstream press. Kind of bugs me when people get all hyperbolic like that.