What. The. Fuck.

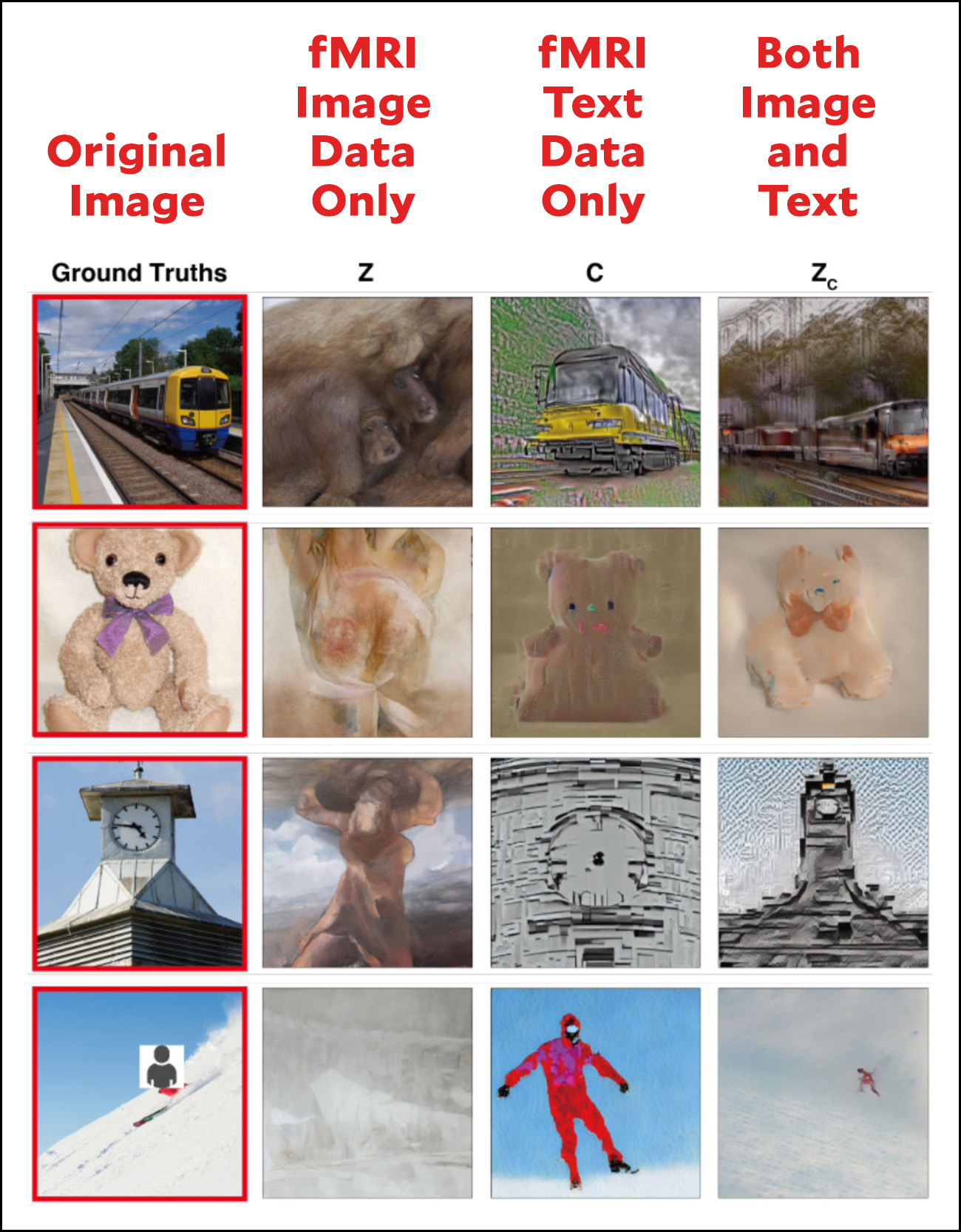

This is from a paper written in December by Yu Takagi and Shinji Nishimoto of the Graduate School of Frontier Biosciences in Osaka. Subjects were put in an MRI machine and shown a series of pictures. The fMRI data was collected, and then software using an algorithm called Stable Diffusion attempted to reconstruct the image. It did pretty well!

The algorithm uses data from both image and text signals in the fMRI data. Here's a sample of what the reconstructions look like using just the image or just the text data:

I gather from reading the paper that this is not a big deal. Researchers have been working on this stuff for years, and the particular innovation of the Stable Diffusion algorithm is that it works "without the need for any additional training and fine-tuning of complex deep-learning models."

But I've never seen anything quite like this. I've seen vague blobs from fMRI reconstructions, but that's about it. I guess I haven't been keeping up.

This has possibilities that it's hard to even list. But go ahead and give it your best shot in comments. As always, the question is not so much what this technology can do right now, but what something already this good can do in a few years.

In the second set of images, is something up with Subject Z?

The second figure is all the same subject. Column Z is the image reconstructed using only one type of fMRI data. Column C is using another type of fMRI data, and Column Zc is using the combination of the two using the less computer intensive algorithm they are studying.

I misunderstood! I skimmed it too quickly. But still, is the first column the best they get from an image alone? When they showed him the train, was he really strongly thinking of something else?

Basically it shows that the image data alone doesn't capture the "semantics" of the image (what it shows), only its geometrical contours (and for the last one it doesn't even get that).

This is pretty cool/scary/amazing. In the future, your dreams will be recordable and we'll be able to definitively ascertain the brain-deadness of people in comas. But also, a legal battlefield awaits on many fronts. Will its use be deemed a privacy intrusion implicating 5A, even while corporations operate unrestrained? Will there be any restrictions of its use against enemy combatants? One imagines an entire cottage industry will pop up to provide you with false memories in order to hijack the use of fMRI. We're getting closer to Total Recall, for better or worse.

I assume SCOTUS will sign off on this technology being used against poor people and minorities, but definitely not against white people who have lots of money. (Like every other ruling they issue these days.)

Doubtful. The current SCOTUS has been very protective of people's rights with respect to GPS devices and cell phones, even though its holdings disproportionately benefit the poor and low end criminals. You can complain about a lot of things with SCOTUS, but they've been pretty good on the issue of new technology and the 4th Amendment.

One of the episodes of House, MD demonstrated some similar technology 10 or so years back. Of course, the series was fiction, but the technology, such as it was at the time, was not.

My first impression when reading the headlines about this study was, "interesting lab experiment, but how useful is an application that requires the use of an MRI." But at this point, the scientists are trying to understand how the brain encodes images, and how that knowledge might be applied both to improve machine vision, and of course, future applications for cognitive research, not necessarily for "reading thoughts." Once you understand the encoding mechanisms, finding a simpler and more practical way to detect those signals may come next. Before then, this information could be immensely helpful to improving machine vision, which has difficulty interpreting real images with significant complexity.

This research is very exciting with a huge number of useful applications, and I hope that it avoids generating the type of hysteria that has accompanied ChatGPT and Microsoft's Sydney.

It's easy to think that in time they may be able to use this to identify the complete psychos who, all the neighbors say, were just normal guys before thirty bodies were found in their basements, or others who are simply wrong for particular jobs.

I can imagine such tests becoming required in certain professions, especially those that require contact with people, like teachers or doctors, or involvement with sensitive information or circumstances, like investigators, financial managers and intelligence professionals.

So Brainstorm was a forward-looking documentary?

Can it read my mind when I think…. No. It cannot. Silly. What do you think I thought?

That is pretty stunning, but you keep making the same mistake -- assuming that since progress has been rapid so far it will continue to be so. The truth more likely is that progress has been rapid because the easy problems are the ones that have been falling. Now they have to tackle the tough ones and it is likely to slow down soon, just like self driving cars. Arguing that success is imminent because it is "already this good" is assuming that all the component problems of this larger problem are easy, that there is nothing hard to do.

I predict massive growth in the 2030s and 2040s for a job category called "MRI mind-reading blocking techniques coach."

Where is Eve? Is she off today?

In case it is not obvious, they cannot "read" YOUR mind. The analysis and reconstruction are specific to each subject, based on data collected for that subject. So they can "read" only their subjects' minds.

So this technology is "reading" what we perceive to be what we saw?

We "see" what we want to see and news organizations like Fox and MSNBC use this human frailty to their advantage already. Every pic of Joe Biden on Fox shows him falling or looking somewhat "plastic". On MSNBC their pics of Trump show him wild eyed. What would people think of Rudy G if the ONLY pic of him was him heavily perspiring his hair dye at a press conference?

What could this do for a blind person? Of course their brain wouldn't respond to a visual image. How would this MRI thing work on a blind person who is smelling something awful?

This is weird science IMHO

This is not a well written paper. I think there may be an interesting contribution, but it is hidden under many layers of attention grabbing image processing.

Stable Diffusion (https://en.wikipedia.org/wiki/Stable_Diffusion) is one of the well-known text-to-image generation models. Other similar, very successful text-to-image generation models are DALL-E (https://en.wikipedia.org/wiki/DALL-E) and Midjourney (https://en.wikipedia.org/wiki/Midjourney).

Stable Diffusion, DALL-E, and Midjourney are all amazing accomplishments, and have demonstrated that we've reached a point in technology where we can take a text prompt encoded as a vector, and generate an image with similar semantic content to the text prompt. The text prompt is encoded as a vector using a model called "CLIP". The following is a really good introduction: https://www.assemblyai.com/blog/how-dall-e-2-actually-works/

This is exactly (and only) what is happening in the "fMRI Text Data Only" column of the second image. The authors have taken a semantic vector, fed it to Stable Diffusion, and shown that (no surprise based on previous work by many other researchers) Stable Diffusion produces an image with the right semantic content.

The real question is "where did the semantic vector come from?" This is the contribution of the paper, and is only barely described (in Section 3.3):

> To construct models from fMRI to the components of LDM, we used L2-regularized linear regression, and all models were built on a per subject basis.

and in the conclusion:

> Unlike previous studies of image reconstruction, our method does not require training or fine-tuning of complex deep-learning models: it only requires simple linear mappings from fMRI to latent representations within LDMs.

The fact that a simple linear regression, from the vector representing the fMRI to the vector representing the semantic content used as input to Stable Diffusion, can do this successfully is kind of cool, and it is the part of the paper that I find interesting.
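For anyone wondering what an "L2-regularized linear regression" from fMRI to a latent vector amounts to, here is a minimal sketch on synthetic data, not real fMRI. Everything in it is my own assumption for illustration: the function name `fit_ridge`, the array sizes, the noise level, and `alpha=1.0`. It is not the authors' pipeline, and it leaves out voxel selection, intercepts, and hyperparameter search.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    X: (n_samples, n_voxels) stand-in for fMRI responses.
    Y: (n_samples, n_latent) stand-in for the target latent vectors.
    No intercept term is fitted in this sketch.
    """
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)

# Synthetic "subject": a hidden linear map plus noise (hypothetical sizes).
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, n_latent = 200, 50, 30, 8
true_W = rng.normal(size=(n_voxels, n_latent))

X_train = rng.normal(size=(n_train, n_voxels))
X_test = rng.normal(size=(n_test, n_voxels))
Y_train = X_train @ true_W + 0.1 * rng.normal(size=(n_train, n_latent))
Y_test = X_test @ true_W + 0.1 * rng.normal(size=(n_test, n_latent))

# Fit on the training trials only, then predict latents for held-out trials.
W = fit_ridge(X_train, Y_train, alpha=1.0)
Y_pred = X_test @ W

# Correlation between predicted and actual held-out latents.
test_corr = np.corrcoef(Y_pred.ravel(), Y_test.ravel())[0, 1]
print(f"held-out correlation: {test_corr:.3f}")
```

The predicted vector `Y_pred` is what would then be handed to the image generator in place of a text-prompt embedding. Scoring on trials that were not used for fitting is the usual way to tell a real mapping from a memorized one.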

Note though that they say they are training on a "per-subject" basis, and I suspect they are perhaps overfitting. I wish the paper had focussed on a more complete description of the actual contribution.

Thanks for this great analysis.

I read the paper too and came to a similar conclusion. Your explanation of the text to image model makes me even more sure.

They imply--without actually stating it in so many words--that they reconstructed an image shown to a person from the person's MRI at the time they looked at the picture.

I don't know how good the resolution of the best MRI machines is. I would be surprised if it were even in the neighborhood of a single neuron given that MRI measures everything across a layer of bone. And anyway the fact that they are not clear about what exactly the experiment involved makes me suspicious. This makes me remember cold fusion.

Concerning resolution: Currently a pixel is in the region of 10**5 neurons. The temporal resolution is worse, because it relies on the haemodynamic response, which is on the order of seconds. So the dynamics of thinking are out of the question.

"The real question is 'where did the semantic vector come from?'"

Yes. It seems clear that most of the image detail comes from the text data. How this is generated is completely mysterious to me. So I can't judge how significant this research is. Does the text data introduce information about the subject beyond what appears on the fMRI image?

Still fascinating. Thank you for your good analysis.

"Note though that they say they are training on a "per-subject" basis.."

That is necessarily true for all fMRI-based models, because at resolutions below the gross features of the cerebral cortex (~1 cm), human brains differ, and you cannot project activity in one cerebral cortex onto another. It will also be true for any other technology that builds models based on activity at high resolution.

For this article, you can see it in the images that they give at the end of the appendix:

https://www.biorxiv.org/content/biorxiv/early/2022/12/01/2022.11.18.517004/DC1/embed/media-1.pdf?download=true

The overall patterns are the same across individuals, but the details are different.

As with computer-generated words, where will the poets, songwriters, and journalists go? A machine will do it all... No more satisfaction in writing a great letter. Of course, today's kids don't write sentences.

You've been engrossed with AI lately. Have you read this? https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html

Unfortunately, like every AI advancement, it will be used extensively to sell us more crap or addict us to something that will sell us more crap.

Shit ticket to paradise,

https://thehill.com/policy/energy-environment/3878052-study-toilet-paper-adds-to-forever-chemicals-in-wastewater/

I would be interested to know the correlation between IQ and the quality of the images reconstructed from those undergoing fMRI. Also, might there be some mental strategies one could use to improve one's mental imagery? For example, since the text appears to greatly help in forming better images, perhaps deliberately concentrating on a verbal description while in the MRI would help with image formation.

The application of fMRI to Alzheimer's could be a great clinical application. As AD advances patients lose the ability to speak yet one suspects that they still have the ability to form mental images. This could be a fantastic way to allow them to maintain some level of personal agency even as they progress into severe illness.

I would like to see a fully animated rendering of my dreams, most of which seem fascinating for about five seconds after I wake, but then are lost to me. However, I would not want Science to have access to a whole bunch of other stuff that goes on in my brain.