My previous post was about a New York Times lawsuit accusing Microsoft and OpenAI of copyright infringement for training their AI models on Times data. This prompts me to remind everyone of what "training" really means. Most people have a vague idea that AI models just trawl the internet and ingest whatever they find, which then gets analyzed and regurgitated by a vast robotic algorithm. And that does happen. But there's way more to it.

AI models are useless without a huge amount of human assistance. In particular, they rely on vast and expensive amounts of annotation, sometimes called labeling, that explains to the AI just what it's seeing. This is a roughly $3 billion (and growing) business populated by dozens of specialist companies like Scale, LabelBox, CloudFactory, and others.

There are several different types of annotation:

- Text, including semantic, intent, and sentiment annotation. Semantic annotation is the simplest kind: armies of human annotators are asked to tag, say, all song titles or all proper names or all telephone numbers in a piece of text. Intent annotation involves telling the AI whether a sentence is a question or an assertion or an opinion. Sentiment annotation is about tagging emotions. This tells the AI whether, say, a review is positive or negative, or whether a statement is an insult or a sarcastic compliment.

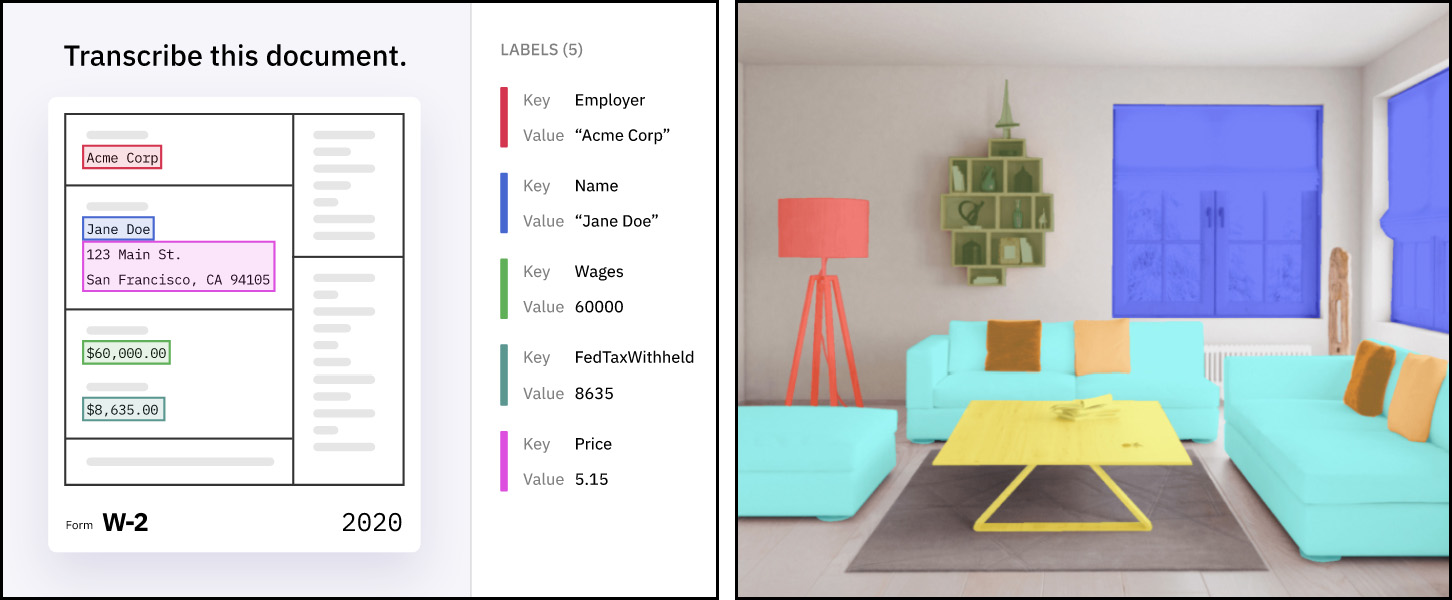

- Image, which is most commonly object recognition. This can be simple or detailed, such as tagging buildings in an image (simple) or tagging in detail all the parts of a building (doors, windows, ledges, etc.).

Examples of text annotation (left) and image annotation (right) from Scale, one of the leaders in the AI annotation industry. - Video, which is similar to image annotation. This is one of the most common types of annotation because it's used in huge volumes by driverless car companies. In many cases, video is literally tagged frame by frame in order to recognize motion and change over time.

- Audio, which mostly involves classifying different types of sound (music, human voices, animal sounds, police sirens, etc.).

Routine labeling is done by armies of English speakers in developing countries for a couple of bucks an hour. Popular sites include Kenya, India, and the Philippines. High-end labeling for things like law, medicine, and other specialized fields, is mostly done in the US and can pay pretty well.

The holy grail, of course, is automating the annotation process so that humans are no longer necessary. So far, only about a quarter of annotation is done via software, but that's likely to grow as AI models become more and more capable.

The point of all this is that "training" is not merely the act of hoovering up data and then spitting it back out. It's a laborious process that's useless unless a lot of value is added in the form of human labeling. Among other things, this is one of the reasons that I think training AI on copyrighted information is OK. It's truly a transformative process, not merely a simpleminded and derivative copy and paste.

But you're still using material originally generated by others, --in schools textbooks are paid for.

Do AIs have a right to exist?

Best among the data entry jobs we have. No internet connection is required to do the job. Just, once download the files on your desktop or any device. Present the work bx02 to us in fifteen to thirty days duration of time. The size of the page is A4 size. The money for the page is offered from $ 10USD to $20USD according to the plan. Just present eighty-five percent vx02 perfection, Simple way to earn money.

Here.......................... https://paymoney33.blogspot.com/

Intellectual property protection is quite a racket in America.

You do realize that both Europe and Japan are actually significantly worse when it comes to copyright, dont you?

To me it's simple. The AIs are "reading" things. Written material is published for the core purpose of allowing it to be read. I don't see that the identity of the reader (as a computer rather than a human) makes any difference. Merely reading a copyrighted work, and learning things from it, CANNOT be a copyright violation.

It's only learning if it's sentient, otherwise it's tweaking.

Give me a rigorous definition of "sentience" (and of "tweaking", for that matter) and I might join you.

'Tweaking' is externally banged into something that doesn't care one way or the other about it, 'sentience' is massively redundant error correction for the purpose of self-determination.

To me it's simple. The AIs are "reading" things. Written material is published for the core purpose of allowing it to be read.

Couldn't agree more. It's a lot like music. If you play or perform, it's meant to be heard, and it goes into the musical hive mind. And enriches it.

If you're producing creating output for money, you shouldn't expect other people to refrain from making a buck from the improvement in their own creative output that flowed from consuming yours.

Having worked in this sort of business for a long time, I agree entirely that "curation" is a HUGE -- and hugely unrecognized -- part of the business. The present hype about AI reminds me of the overexcited and badly uninformed jabbering about "big data" (remember that?). You need PEOPLE in the loop, and a lot of them, or it's basically GIGO.

As to where it moves from "learning" to "plagiarism," I'm really not sure. On the one hand, amalgamating and extending the thoughts of others is not plagiarism. On the other, taking the thoughts of others and merely rewording them, or salting them with a few details, or stapling them onto merely reworded thoughts of others with no original content, IS plagiarism. I suspect that with current AI, the difference depends heavily on the details of implementation.

A curious aspect to this is that, all other things being equal, the larger the training set, the less likely for a model to reproduce a member of that set verbatim.

For what it is worth, copyrights protect the exact expression or wording, not the thoughts or ideas expressed.

You mean -- drumroll! -- just good 'ol-fashioned ETL? Extract, translate, load? Whodda thunk /s

Present AI models may violate the EEC’s “right to be forgotten”. At present there is no way to make an AI model “forget” a single distinct fact among all the information it has “learned”.

Noble as the "right to be forgotten" may be, I've always found it quixotic.

Just like you can't make a salesperson forget a customer,

So I believe I pointed this out to you about 5 years ago as a reason why AI is not just over the horizon (or, in that instance, only 5 years off). Nothing has changed.

Well, except for the hallucinations.

Good info from KD.

Let's not analogize strictly from humans. Yes, a human reading the NYT is fair use, but that law was made when only humans could read.

Maybe some laws should change, it won't be good us if AI titans scoop up all the money.

Laws are there to serve us, we don't exist to serve the law.

How would the law differentiate from Google scooping up all the titles, blurbs, and putting the words in their search engine; and the language models, tho?

Because Google is then linking back to its sources.

Bing Chat, Microsoft’s public facing version of ChatGPT, does provide citations and links to its sources. And I use them because I don’t trust it to be accurate.

You are largely wrong, though not completely. LLMs don't use labeled data at all in the bulk of their training. You are perhaps thinking of other, non-generative, AI, especially what is called supervised learning.

Where LLMs do use labeled data is after the heavy lifting has been done and the model is being refined for specific contexts.

Here's a summary from an industry doc:

Theoretically, large language models can assume functionality without labeled data. Models like GPT3 use self-supervised or unsupervised learning to develop capabilities of understanding natural languages. Instead of training with annotated data, it uses the next-word prediction approach to learn how to complete sentences in the most contextually-logical manner.

However, if you want an LLM to be a chatbot for your company's tech, you do the annotated fine tuning as a final phase of training.

You need not labeling, but testing of the associations so that the model hasn't 'learned' the wrong thing.

That's still people.

+1. What Kevin describes (labeling) is common practice for other types of ML training, but is generally not part of LLMs. Look up RLHF if you want to understand the part that is most intensive in human labor.

Indeed, one of the main breakthroughs of LLMs has been figuring out how to train a model /without/ needing massive amounts of labeled training data. This is called "unsupervised learning".

This is one of the reason why AI interpretation of non-visual sensory data has been slow to develop: Humans have trouble identifying the data, so labeling is slow to develop.

So stuff like lidar and radar hasn't been trained as well on the same basic inputs.

Pingback: Speak for yourself Kevin, AI is not harder “than I” think | Zingy Skyway Lunch

I disagree about the copyright issue. It is exactly the tech industry (along with entertainment) that has pushed for the most extensive application of intellectual property. If you use someone's work in an effort to make money (and AI is nothing if not that) you should owe licensing fees.

This is not ideal from a creativity point of view but this is how intellectual property has been enforced across the board for may years now. All of a sudden just in time the Silicon masters want to execute a 180 degree turn exactly when it suits their business interest.

Apple seems to be also in on the idea that copyrights need to be enforced if they benefit Apple but not if Apple benefits from them being unenforced and if the victim is a small company that has limited ability to pay lawyers.

Training an AI may be harder than you think, but it can't possibly be harder than training Nikki Haley.

Which is why an AI candidate may be the future of the Republican Party. The human kind clearly aren't up to the job.

Nope, not creating an exTwitter id to look at some random exTweet.

I never had an account on Xitter, and the link works for me, including playing the video.

Like Reagan!

I'm going to assume they didn't get a single digital subscription and then allow all their employees access. That has to violate the ToS. Does the NYT have a subscription tier that would permit that?

I'd say it's questionable whether getting a single subscription and then copying every single available page on the site qualifies as "fair use." I assume they consulted lawyers first and handled the tagging by releasing single photographs or segments of article, because otherwise you "fair use" the whole digital archive and then grant free access to it for your employees/subcontractors, which no longer seems fair use. That would help explain how poor these AIs are at structures past the paragraph level, if nobody is tagging an entire article or essay or book as a single item.

But if tagging is transformative, then could I simply buy a single subscription to a website, rip all the pages and set them up on a separate server, run a script that labels all the words based on part of speech, and then offer paid access to that "transformed" content? What if I pay thousands of people to do the same work by hand? Is that fair use, but only if the purpose is to train an AI, not to make the content available to the public without the NYT receiving a dime? These questions seem linked to the claim that it's OK for a government to keep a complete automated digital record of all its citizens without violating any constitutional right to privacy, on the grounds that privacy is only violated when a human being looks at the record.

There's a linked issue: if I could read a NYT article, and then replicate myself millions of times, with each of those copies having read the article, is that like providing the article for free to millions of people? What if I were instanced and only came into existence briefly when someone asked me a question about the news?