Hum de hum. Facebook. I'm truly on the fence about Facebook and disinformation, and I feel like I need to write down some things just to clear my head a little bit. If you feel like following along, take all of this as a bit of thinking out loud. I have very few firm conclusions to draw, but lots of questions swirling around that have influenced how I think about it.

First off, my one firm belief: Facebook is a private corporation and has the same First Amendment rights as any newspaper or TV station—or any other corporation, for that matter. This means that I oppose any content-based government regulation of Facebook, just as I oppose content-based regulation of Fox News or Mark Levin, no matter how hideous they are.

I remain vaguely appalled at the lack of serious research into the impact of Facebook and other social media platforms on politics and disinformation. There's just very little out there, and it surprises me. I know it's hard to do, but social media has been a big deal for nearly a decade now, and I would have expected a better corpus of rigorous research after all this time.

It turns out, however, that there's one thing we do know: social media has a clear positive impact on getting out the vote. Whatever damage it does needs to be weighed against this.

The Facebook empire is truly gigantic. For ordinary regulatory purposes, it should be treated as a monopoly.

I've been uneasy from the start with the Frances Haugen show. Partly this is because it's been rolled out with the military precision of a Prussian offensive, and that immediately made me skeptical of what was behind it. This might be unfair, but it's something that's been rolling around in my mind.

I've also been unhappy with the horrifically bad media reporting of Haugen's leaked documents. There's been an almost synchronized deluge of stories about Instagram being bad for teenage girls, a conclusion that's so wrong it's hard to know where it came from. The truth is simple: On one metric out of twelve (body image), Instagram had a net negative effect on teen girls. On the other eleven metrics it was positive. And it was positive on all twelve metrics for teen boys.

It's hard to draw any conclusion other than the obvious one: Instagram is a huge net positive for teens but has one or two problem areas that Facebook needs to address. That's it. But this is very definitely not what the media collectively reported.

I've read several studies suggesting that Facebook spreads more disinformation than other platforms. However, it's not clear if this has anything to do with Facebook's policies vs. its sheer size. One study, for example, concluded that Facebook generated about six times more disinformation than Twitter, but that's hardly a surprise since Facebook has about six times the number of users. Per user, that works out to roughly the same amount.

And YouTube is largely excluded from these studies even though it's (a) nearly as big as Facebook, and (b) a well-known cesspool. I assume this isn't due to YouTube being uninteresting. Rather, it's due to the fact that it's video and therefore can't be studied by simply turning loose some kind of text-based app that analyzes written speech.

There are lots of social media platforms, of course, but disinformation is always going to be most prevalent on those that appeal to adults (aka "voters"). Just by its nature, Facebook will attract more disinformation than TikTok or Snapchat.

All that said, there's no question that (a) Facebook and its subsidiaries account for a gigantic share of the social media market, and (b) they have a legitimate vested interest in keeping users engaged, just like every other social media platform. So where should their algorithm draw the line? What's the "just right" point at which they're presenting users with stuff they're interested in but not pulling them into a rabbit hole of right-wing conspiracy theories?

This is a genuinely difficult question. I can be easily persuaded that Facebook has routinely pushed too hard on profits at the expense of good citizenship, since I figure that nearly every company does the same. But I don't know this for sure.

Nonetheless, Mark Zuckerberg scares me. The guy's vision of the world is very definitely not mine, and he seems to be a true believer in it. This is the worst combination imaginable: a CEO whose obsessive beliefs line up perfectly with the maximally profitable corporate vision. There's just a ton of potential danger there.

Zuckerberg is also way too vulnerable to criticism from right-wingers who have obviously self-interested motives. I suppose he recognizes that they're as ruthless as he is, and is trying to avoid a destructive war with the MAGA crowd. He just wants to be left alone to change the world and make lots of money.

Facebook is a big source of misinformation and conspiracy theories. That's hardly questionable. But conspiracy theories have been around forever and were able to spread long before social media existed. I wrote about this at some length here, and it's something I wish more people would internalize. In the past conspiracy theories were spread via radio; then newsletters; then radio again; then email; and eventually via social media. And those conspiracy theories were no less virulent than those of today. (Just ask Bill Clinton.) The only real difference is that Facebook allows conspiracy theories to spread faster than in the past. But then again, everything is faster now, which means the pushback to conspiracy theories is also faster.

There's another difference: Facebook makes all this stuff public. In the past, most people didn't really know what kinds of conspiracy theories were being peddled until they finally got big enough to appear on the cover of Time. That's because they were spread via newsletters that were mailed only to fellow true believers. Ditto for email. Ditto for radio if you didn't listen to the late-night shows where this stuff thrived. So we all lived in happy ignorance. That's gone now, because Facebook posts and comments are all instantly available for everyone to see. That makes it scarier, but not really all that different from the past.

As always, I continue to think that the evidence points to Fox News and other right-wing outlets as the true source of disinformation. Facebook disinformation mostly seems to stay trapped in little bubbles of nutcases unless Fox News gets hold of it. That's when it explodes. Facebook may be the conduit, but most often it's not the true source of this stuff. Traditional media is.

Also Donald Trump.

I mean, just look at polls showing that two-thirds of Republicans think the 2020 election was stolen. Two-thirds! There's no way this is primarily the fault of social media. It's Fox News and talk radio and Donald Trump and the Republican Party. Facebook played at most a small supporting role.

Remember: this is just me doing a brain dump. I'm not pretending everything here is God's own truth. It's just the stuff that I've seen and read, which influences the way I've been thinking about Facebook and social media more generally. Caveat emptor.

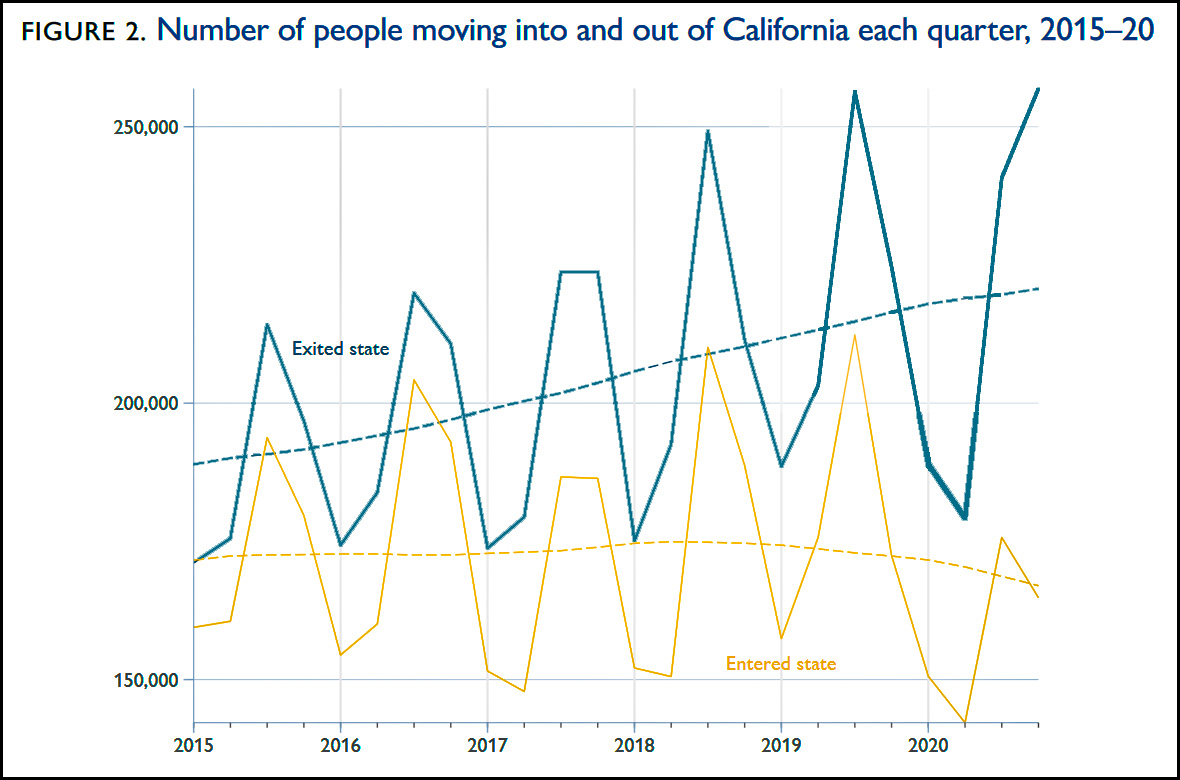

Nothing much to see here. High housing prices have been the cause of increasing migration for many years, especially from the Bay Area. It's an old story. If anything, however, the growth rate slowed a little bit in 2020. And polling data shows the same thing. There's just no evidence that the pandemic has caused Californians to reassess much of anything.
