Tyler Cowen points today to an essay by Dean Ball about regulation of AI. Basically, Ball is afraid of endless upward ratcheting. A seemingly limited law—like California's, which proposes to regulate AI models that could produce WMDs and similar catastrophes—is likely to grow over time:

> Let’s say that many parents start choosing to homeschool their children using AI, or send their kids to private schools that use AI to reduce the cost of education. Already, in some states, public school enrollment is declining, and some schools are even being closed. Some employees of the public school system will inevitably be let go. In most states, California included, public teachers’ unions are among the most powerful political actors, so we can reasonably assume that even the threat of this would be considered a five-alarm fire by many within the state’s political class.
>
> ....So perhaps you have an incentive, guided by legislators, the teachers’ unions, and other political actors, to take a look at this issue. They have many questions: are the models being used to educate children biased in some way? Do they comply with state curricular standards? What if a child asks the model how to make a bomb, or how to find adult content online?

Ball looks at this from a public choice framework: what are the regulators incentivized to do? Regulate! So they'll always be looking around for new stuff to tackle.

That's fine, but I don't think you need to bother with this framework. I know that most people still don't believe this, but AI is going to put lots of people out of work. Lots and lots. And when that happens, one of the responses is certain to be government bans on AIs performing certain tasks. After all, governments already do this, protecting favored industries with tariffs or licensing requirements or whatnot. How hard would it be to mandate the continued use of human doctors and human lawyers even if someday they aren't necessary? Those folks have more than enough political clout to get it done.

On the other hand, taxi drivers, say, don't have a lot of political clout. So driverless cars might well take over their jobs with no one willing to do anything about it. Sorry about that.

Any way you look at it, though, there's someday going to be lots of pressure to preserve jobs by regulating robots and AI. Maybe in ten years, maybe in five years, maybe tomorrow. But it's going to happen.

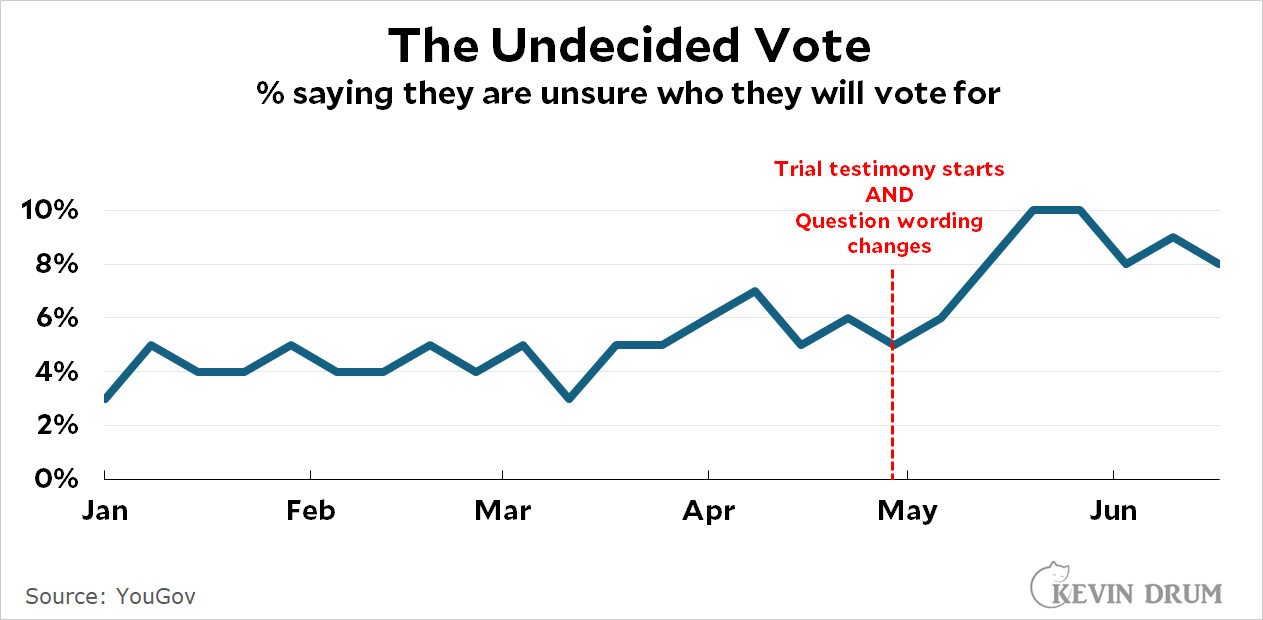

Unfortunately, two things happened at the same time in late April. First, testimony began in Trump's hush money trial. Second, YouGov changed its question wording slightly, from "who would you vote for?" to "who do you plan to vote for?"
