A few days ago the New York Times ran a piece about how doctors were using ChatGPT in their work. Many of them, it turns out, aren't using it to help with diagnoses. They're using it to help them talk to patients better. One of them is Dr. Michael Pignone at the University of Texas at Austin:

He explained the issue in doctor-speak: “We were running a project on improving treatments for alcohol use disorder. How do we engage patients who have not responded to behavioral interventions?”

He asked his team to write a script for how to talk to these patients compassionately. “A week later, no one had done it,” he said....So Dr. Pignone tried ChatGPT, which replied instantly with all the talking points the doctors wanted.

....The ultimate result, which ChatGPT produced when asked to rewrite it at a fifth-grade reading level, began with a reassuring introduction:

If you think you drink too much alcohol, you’re not alone. Many people have this problem, but there are medicines that can help you feel better and have a healthier, happier life.

That was followed by a simple explanation of the pros and cons of treatment options. The team started using the script this month.

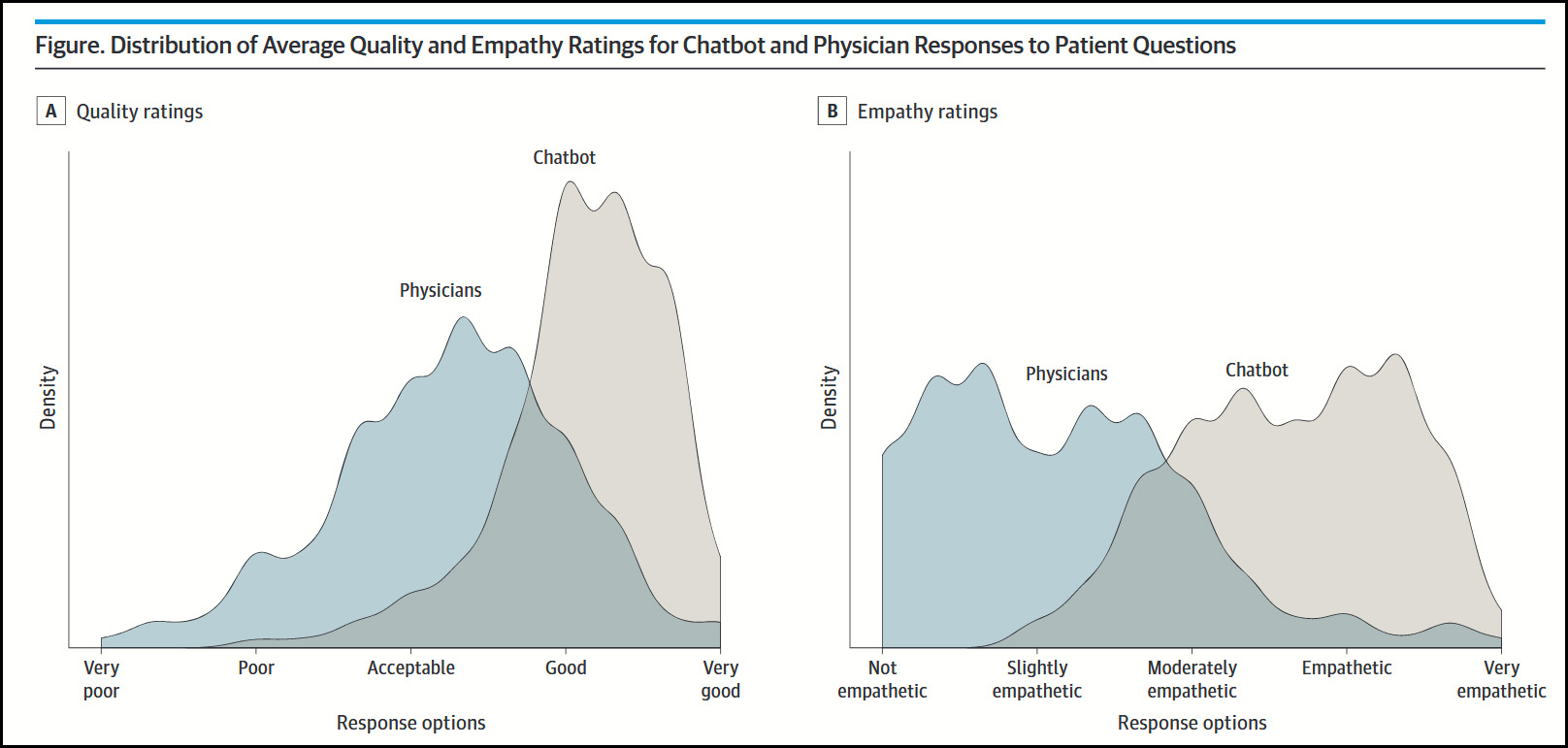

This won't come as a surprise if you remember a post from a few weeks ago about a study of ChatGPT's ability to communicate with patients. People were presented with several scenarios and shown both a doctor's explanation and ChatGPT's explanation. By a wide margin, even doctors agreed that ChatGPT was better.

ChatGPT is also handy for routine scutwork like writing appeals to insurance companies, which it can do in seconds. As one doctor put it, “You’d be crazy not to give it a try and learn more about what it can do.”

Dedication, knowledge, and ability don't necessarily confer empathy and communications skills. If everybody in any public-facing job could glom onto that, it would be great.

Looks like a step forward! Don't expect your bills to decrease any, however.

The plot doesn't make sense on the face of it. If there are only discrete options, such as those listed at the bottom, there should be bars, not complicated curves. Even if the responses were from one to ten there would be bars - or did people give responses to the second or third decimal place? The plot on the right has four bimodal peaks when there are five options. Maybe the people who wrote the paper should have had a chatbot do it.

There were 195 items, each rated from 1 to 5 by respondents. So it is a histogram with 4*195+1 bins (I think).
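The arithmetic behind the 4*195+1 figure can be checked with a short sketch. It assumes, as the comment does, that each plotted value is built from 195 integer ratings on a 1-to-5 scale; whether the paper's plot was actually built that way isn't confirmed here. The total of n such ratings can land on every integer from n to 5n, which gives 4n+1 distinct values. The function names below are hypothetical, chosen for illustration.

```python
from itertools import product


def distinct_totals_formula(n_items: int, low: int = 1, high: int = 5) -> int:
    """Closed form (hypothetical helper): the total of n integer ratings in
    [low, high] can be any integer from n*low to n*high, so there are
    n*(high - low) + 1 distinct totals."""
    return n_items * (high - low) + 1


def distinct_totals_brute_force(n_items: int, low: int = 1, high: int = 5) -> int:
    """Enumerate every combination of ratings and count the distinct totals.
    Only practical for small n_items, since there are (high-low+1)**n_items combos."""
    scale = range(low, high + 1)
    return len({sum(ratings) for ratings in product(scale, repeat=n_items)})


if __name__ == "__main__":
    # Check the closed form against brute force for small cases.
    for n in range(1, 6):
        assert distinct_totals_formula(n) == distinct_totals_brute_force(n)
    # The case from the comment: 195 items on a 1-5 scale.
    print(distinct_totals_formula(195))  # prints 781
```

A mean is just the total divided by n, so it has the same number of possible values, spaced 1/n apart. Under this assumption, that could explain why such a plot looks like a smooth curve rather than five bars.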

https://jamanetwork.com/journals/jamainternalmedicine/article-abstract/2804309?guestAccessKey=6d6e7fbf-54c1-49fc-8f5e-ae7ad3e02231&utm_source=For_The_Media&utm_medium=referral&utm_campaign=ftm_links&utm_content=tfl&utm_term=042823

Seems like the issue is (a) “A week later, no one had done it,” meaning they hadn't even bothered to do the task they had been assigned, which will tend to make anything more difficult, and (b) doctors aren't writers, so maybe if you want something written you need to ask a writer and not a doctor. So basically this is a story in which "We asked people to do something they had very little experience in, they didn't know how to do it, so then we did an advanced Google search."

"...asked people to do something they had very little experience in..."

Really? I expect that doctors who treat patients ought to have some experience talking to them.

It might startle you to realize that writing and speaking are two different things.

This is the kind of stuff GPT should be good at. You can ask it to write at a low grade level, which someone with a degree would have some trouble accomplishing. Also, GPT doesn't need to sleep or have a family. Ask a doc to write something and it comes on top of their normal work, so they have to find the time and motivation. Not so for a computer. (I think that if you asked GPT to do something for engineers on an engineering project or scientists on a science project, you would see the same thing. Businesses have people designated to write stuff for the public.) Also, keep in mind this isn't really GPT talking with people; it is GPT writing something up.

Steve

It will have to be checked for "hallucinations".

I think that's important. If you take a ChatGPT result, check it carefully for accuracy and completeness against the actual information from the company providing the treatment (including how to use it), and then use it as a script, it's a useful tool. But you really need that "curating" of ChatGPT's output before you turn it loose. Given ChatGPT's tendency to suddenly go off the rails the NEXT time it's handed an assignment, you don't want patients using it directly for research. Lock down the script in a PDF file or something, and use that for reference and for handing out to patients.

“…handy for routine scutwork like writing appeals to insurance companies, which it can do in seconds.”

After all this time they had never bothered to create some basic forms and templates for this stuff?

And what, this thing spits out a script for my doctor to read to me when they give me the bad news? And I won’t notice that? Does it provide acting lessons?

Psst, it probably already has, and you didn't notice.

I’m a healthy guy, so I’ve not yet had the pleasure of being lied to or patronized by a doctor using this feature. Still, most people can tell when someone is blowing smoke up their ass, especially in person. Most people are bad liars. That’s why doctors feigning empathy is a problem, I suppose. It takes more than a script. It requires body language and tone. Empathy doesn’t come from strangers, generally.

To me anyway, hearing someone say “…you’re not alone. Many people have this problem…” is a big old red flag of bullshit.

Actually, they did come up with such templates. That's how ChatGPT "discovered" it.

I read this post expecting to see some sort of breakthrough. It would be truly nice to have more convincing ways to help people deal with a problem.

And what is the result? The result is: "Don't worry, there are many people who have your problem". Anybody with half a brain ignores this line and waits for the rest of the advice, hoping there would be something usable in there.

Gosh! They had an entire team and nobody came up with this ubiquitous phrase and hollow commonplace (or maybe nobody considered it a valuable proposal, with good reason). And then "AI" saved the day by rediscovering this platitude. I don't think there is anybody alive in America (or beyond) who has never been told some variation of this bromide.

Of course, this is entirely predictable: ChatGPT spits out common phrases that have been used often in the past, because this is how it was "trained".