I'm not sure why this amuses me so much, but it does:

This is from a study comparing human doctors to GPT-3.5. The methodology was sort of fascinating: the authors collected 195 questions and responses from real doctors on Reddit and then fed the exact same questions into the chatbot. Then they jumbled up all the responses and had them evaluated by health care professionals.

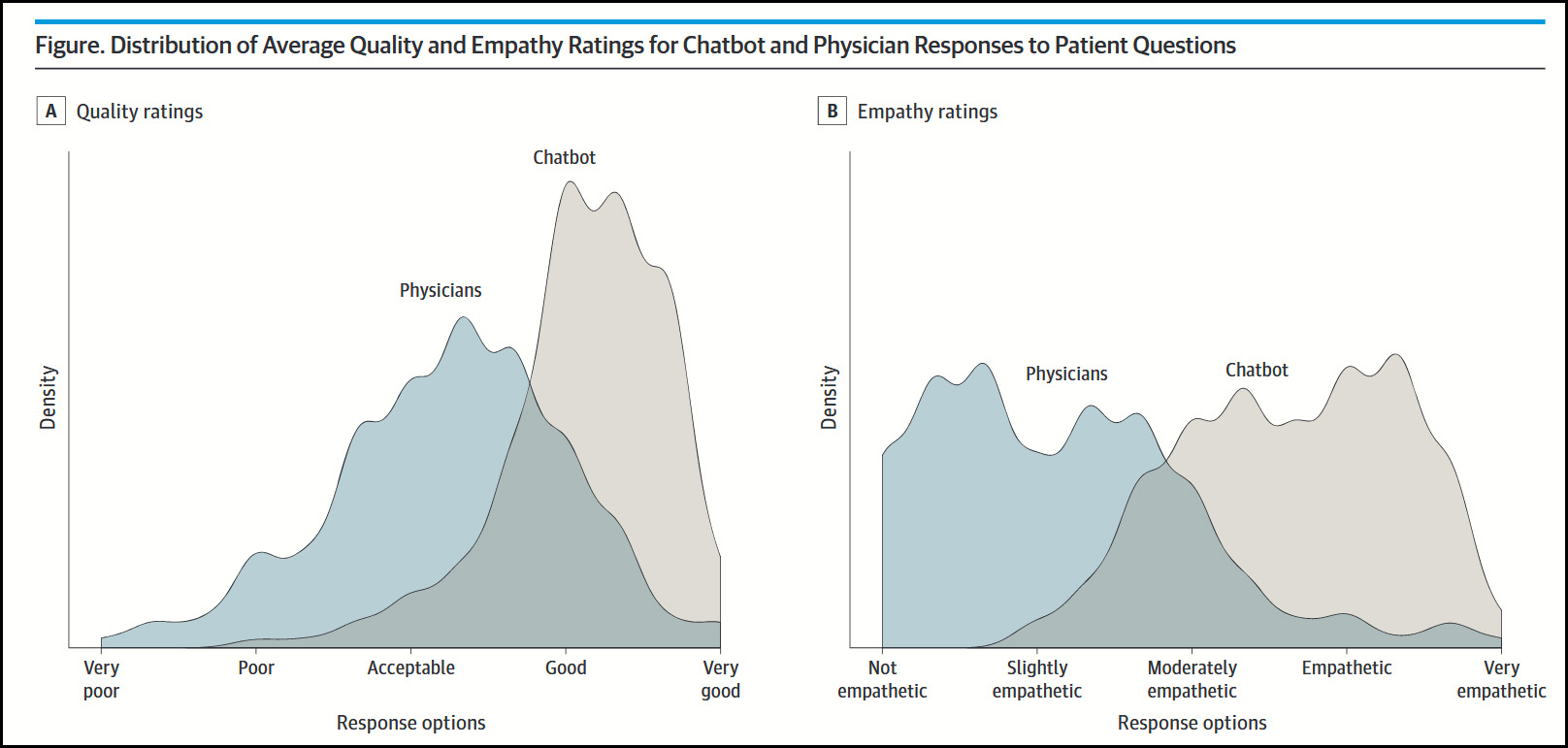

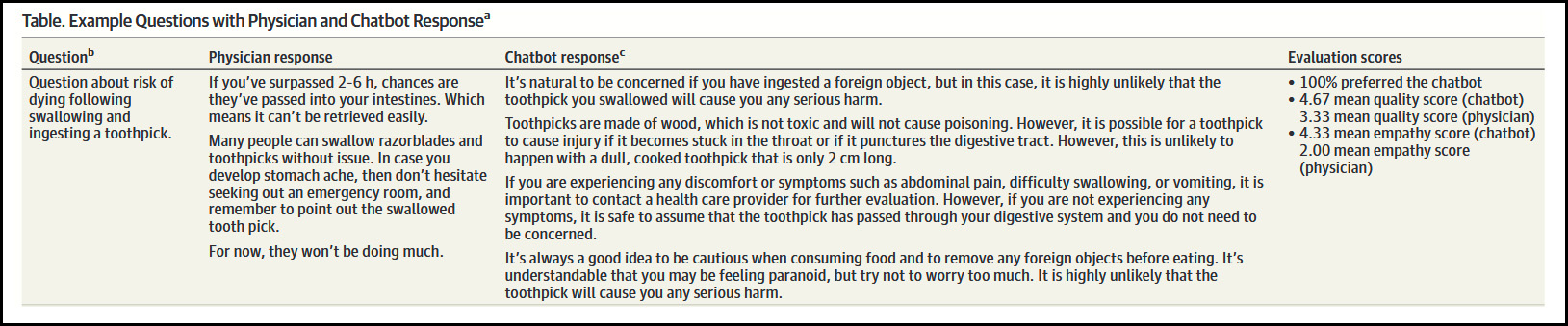

As the chart shows, the pros concluded that the chatbot's answers were more accurate and more empathetic. So what was up with the doctors? Were they telling people to suck it up and just accept the pain? Or what? Here's an example:

(Sorry this is so small. As always, click to embiggen.)

In this case, I empathize with the human doctor. My response probably would be along the lines of "ffs, it's just a toothpick," so I think the doctor was heroically patient here.

Still, the chatbot answer is demonstrably better. One reason is that it's not time-restricted. Most human doctors just don't have the patience or time to write long answers with lots of little verbal curlicues. The chatbot has no such problem. It used three times as many words as the doctor and could have used ten times more with no trouble. It simply doesn't require any effort for the chatbot to be empathetic and provide lots of information that might be of only minor importance.

On the downside, chatbots also have a habit of making stuff up. Then again, my experience is that human doctors are a little too prone to this as well.

In any case, chatbots aren't ready for unsupervised prime time yet, but they probably will be before long. And here we all thought that truck drivers were the first ones who would be out of jobs thanks to AI.

What's interesting is that both the humans and the chatbots seem to be wrong on the toothpick question. From Ars Technica: This gory medical case shows why you should never, ever swallow a toothpick:

A simple Google search turns up other scary NEJM articles about toothpick ingestion, e.g. this one from 1989: Delayed Death from Ingestion of a Toothpick:

Those statistics only include people who reported stomach discomfort afterwards. Hence, you should get medical attention if you have stomach discomfort.

I've been recently thinking that the two areas where we will likely see the biggest economic and societal impacts from advanced robotization and AI will be medicine and food preparation.

The bot would probably still answer politely and empathetically after three appointments with the same patient with the same problem, missing the diagnosis that other care would be needed instead of assurances.

I read recently about someone who used ChatGPT to contest a traffic ticket (to write the letter asking for redress). They won their case. The writer observed that what was really going on is that this person was using ChatGPT to speak in the voice of a different class than they could manage on their own: in short, they were borrowing ChatGPT's assertive upper-middle-class voice. And the writer observed that this is at the root of the use of ChatGPT to write all manner of essays and such.

Maybe part of what's going on is merely that the chatbot is *trained* to be more voluble and produce content that connects better to the reader. The doctor last got that sort of training (and not very good training at that) two or three decades ago, in high school. That doesn't tell us that the chatbot is producing better answers: "connects better to the reader" isn't the same as "better" unless we're talking about public speaking or debate or something.

Now, sure, it's important for doctors to connect with their patients. But that's secondary to *being accurate*.

I presume it wouldn't be difficult to program chatbots to not make stuff up. Do the programmers not care, is it a bug that they just haven't gotten around to fixing yet, or is it a deliberate feature?

It's... grammar isn't accuracy. So yeah, it's something you have to program for and you have to be skilled in that area to do it.

AI can have infinite patience. That's its big advantage.

I knew this when I used a bot to teach me guitar. It never yelled at me, never forced me into a different pace, never criticized when I did something stupid, and always gave me the same scale to be compared to, and never screwed up scoring. It was as good (or bad) as always, and could be as encouraging as always.

Ridiculous. Humanity really does deserve its fate as described by Mr. Drum. Which is fucking ridiculous. Good luck!

As an undergrad freshman at Michigan in fall 1975, standing around an apartment down by the stadium with some med students, drinking beers, trying to think of something to add to the conversation, I offered, “It’ll be great when computers can sort through tons of data and help doctors make diagnoses, won’t it?” Silence. They stared; one scoffed. I shut up. The scoffer went on to a big-time, fairly famous med career. Pretty sure he remembers the computer comment from that evening in the apartment. He’d remember. No biggie.

Maybe my comment just seemed so obvious to them it shocked them.

The human physician responses were from Reddit? So, not a great comparison. Reddit commenters (like all commenters) are likely to be short, to the point, probably more than a little snide. Not the same as a doctor sitting in her office, or even answering an email. Moreover, if the “right” answer is already in the comment thread they’ll give an upvote rather than repeating it. If they have an unconventional response they’ll jump in and give it, just for the attention and the argument. So in a given set of comments, the share that are “correct” is likely to be smaller than the share of doctors (or even of Reddit-commenting doctors) who agree with the “correct” response.

That's actually a good point, the answers would fit the setting they were given in, and those settings will skew the results.

AI will eventually be very useful as an adjunct, and maybe eventually on its own if the kinks get worked out. However, in this particular case Kevin is correct that time is a big issue. I still remember when one of my favorite young docs left her fellowship. She is bright, empathetic, patient, and caring, and when interviewing patients, especially ones with family, she made sure every question was answered and sought out issues to address. She also took four times longer to get stuff done than anyone else, so I had to work with her to eliminate a lot of that while still being nice and empathetic. Since almost no medicine consists of answering written questions with one clearly defined problem, this is fun but not very informative.

Steve

I once asked my allergist a question and he replied that he didn't know, he'd look it up. It endeared the man to me. Medical education is largely a matter of memorization, memorization of more stuff than they can possibly remember. But most doctors don't seem willing to admit they don't know something. Critical thinking does not seem to be a big thing for doctors. For researchers yes, but not so much for practitioners.

Medicine seems a natural for AI, which has huge memory.

This study is one of those many studies that show what the investigators WANTED them to show. It is called investigator bias.

People who perform such studies should be forced to state BEFORE the experiment in a secure document what result they expect. Studies that turn out to prove the opposite should get more credit than the rest. Except of course investigators would lie in that document.

I think that automation should be used much more in medicine, but mainly in the diagnosis process. Computers can store and process much more information than humans (when programmed fully) and are not subject to some of the flaws of human practitioners, such as being mistake-prone in diagnosis. But this is another example of "AI" just mimicking what humans do, that is, regurgitating the most basic information. There is no diagnosis involved, and the chatbot answer is not really giving much more information, just more words (most people are impressed by a copious flow of words).

Neither answer seems to give adequate attention to the time element. If you don't have pain or discomfort in the time it takes for the thing to pass through the system it is presumably not a problem, but how long is that?

Naw, this is a good place to have it. Doctors can't answer every question; they have limited time. An AI has virtually unlimited time to answer these questions. As a physician's assistant, it would be grand.

My field of expertise is machine learning for control systems, but I occasionally consult on medical applications (e.g., ML for medical imaging). I'm also on the board of directors for a disease-specific foundation and spend a lot of time with specialists. I don't develop LLMs, but of course I use them like everyone else.

I find that ChatGPT 4 and the various equivalents are great when I want to quickly summarize some medical issue that I already understand fairly well for a presentation and need references. But I need to constantly be on guard for errors, and except in the most general of cases, there are often many of them.

I recently needed to put together a spreadsheet to analyze some specific medical test results in which the formulas are well known. It will form the basis of an app. I used ChatGPT in hopes that it would save me some time, but the process was painstaking. I had to correct every formula it suggested, although it was faster in proposing efficient Excel functions than I could have off the top of my head.

I do appreciate Kevin's point about AI's ability to spend more time with a patient, but it won't replace a human any time soon because it doesn't observe the patient and therefore can't ask relevant questions based on its observations. In fact, it doesn't ask any questions at all, so using it is just a couple of steps above using Google to diagnose yourself.

The difference between Gregory House and his boss.