A couple of months ago a new paper was released that examined the lead-crime hypothesis. Its conclusion is that there's little evidence for an association, and if there is one it's pretty small.

I haven't commented on it, but not because of its negative conclusion. It's because the paper is a meta-analysis. I'm fairly proficient at plowing through papers that look at specific examples of lead and crime, but a meta-analysis is a different beast altogether: It rounds up all the papers on a particular subject and tries to estimate the average result among them. This is a specialized enterprise, which means I don't have the econometric chops to evaluate this paper. I've basically been waiting for comments from someone else in the lead-crime community who is qualified to judge it.

That hasn't happened yet, and I keep getting asked about it. So, with the caveat that I can't evaluate the methodology of this paper at the proper level, here's what it's all about. I'll add some comments and criticisms of my own, but keep in mind that they're extremely tentative.

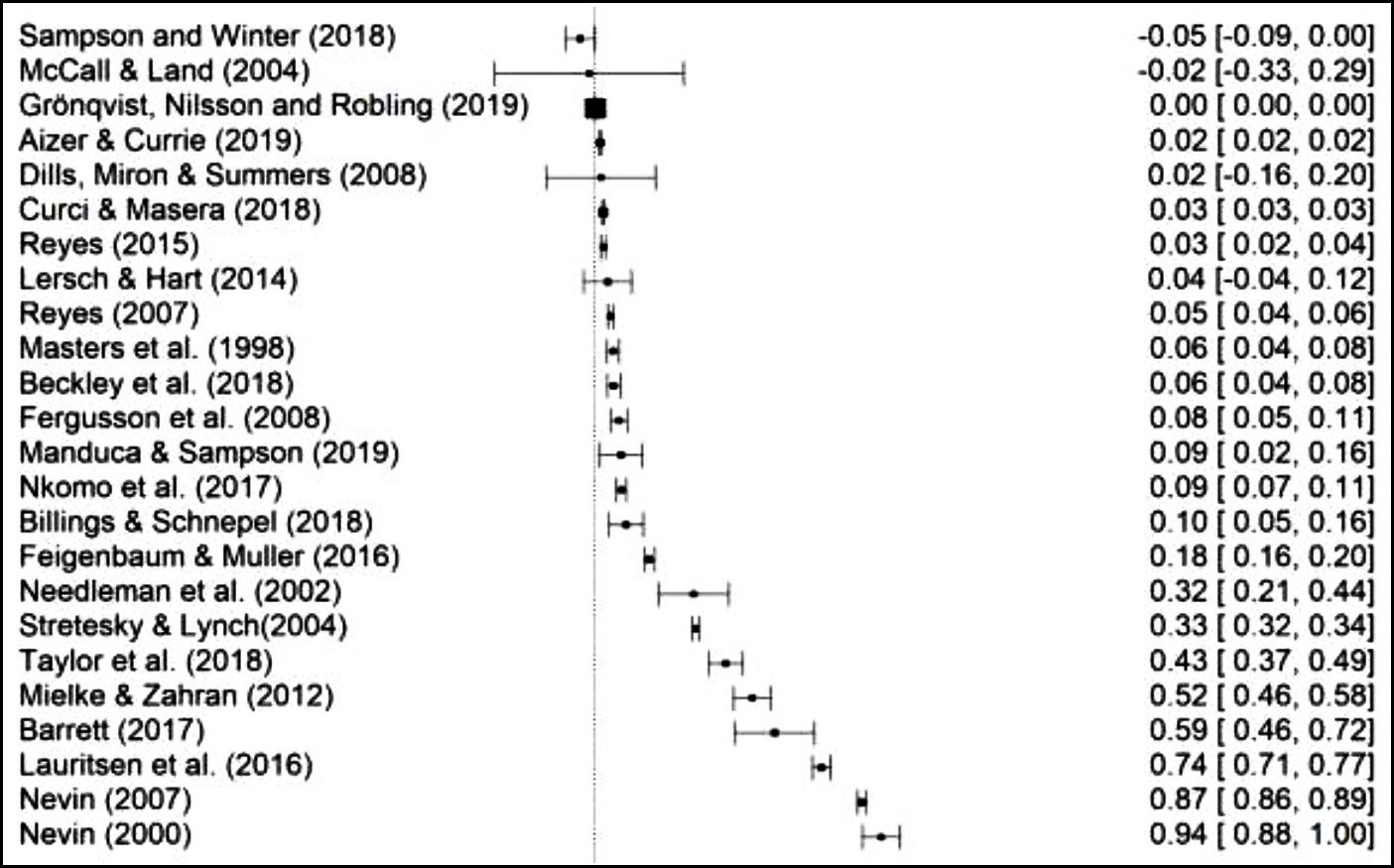

The goal of the authors is to look at every paper they can find that draws quantitative conclusions about the lead-crime hypothesis. Then they take the results and normalize them so they can all be compared to each other on a common scale. Here's their final set of 24 papers:

The first thing I'd note is that I'd probably discard anything prior to 2005 or so, even though that would eliminate the original 2000 paper by Rick Nevin that started the whole thing. Aside from some very rough correlations, there just wasn't enough evidence before then to draw any serious conclusions.

I also note that they left out a good paper by Brian Boutwell, but that may just be one of the seven papers they discarded because the results couldn't be normalized in a way that allowed them to be used. This is a common issue with meta-analyses, and there's not much to be done about it. Still, it does mean that high-quality studies often get discarded for arbitrary reasons.

But none of this is really the core of the meta-analysis. In fact, you might notice that among post-2005 studies, there's only one that shows a negative effect (a smallish study that found a large effect on juvenile behavior but no effect on arrest rates) and one other that showed zero effect (which is odd, since I recall that it did show a positive effect, something confirmed by the abstract). This means that 22 out of 24 studies found positive associations. Not bad! So what's the problem?

The problem is precisely that so many of the studies showed positive results. The authors present a model that says there should be more papers showing negative effects just by chance. They conclude that the reason they can't find any is due to the well-known (and very real) problem of publication bias: namely that papers with null results are boring and often never get published (or maybe never even get written up and submitted in the first place). After the authors use their model to estimate how many unpublished papers probably exist, they conclude that the average effect of lead on crime is likely zero.¹
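To make the publication-bias mechanism concrete, here is a toy simulation. It is not the authors' model. It assumes a true effect of exactly zero, a made-up standard error of 0.1 for every study, and a crude rule that only studies with a positive estimate get published. All of those numbers and the rule itself are illustrative assumptions.

```python
import random
import statistics


def simulate(true_effect, std_error, n_studies, seed=0):
    """Draw hypothetical study estimates and apply a crude publication filter."""
    rng = random.Random(seed)
    # Every study estimates the same true effect with Normal sampling noise (assumption).
    all_results = [rng.gauss(true_effect, std_error) for _ in range(n_studies)]
    # Hypothetical filter: only estimates above zero are ever published.
    published = [r for r in all_results if r > 0]
    return all_results, published


all_results, published = simulate(true_effect=0.0, std_error=0.1, n_studies=10_000)
print(f"mean of all studies:       {statistics.mean(all_results):.3f}")
print(f"mean of published studies: {statistics.mean(published):.3f}")
```

Under these assumptions the published studies average well above zero even though the true effect is zero, which is the kind of distortion a bias correction tries to undo. Whether the lead-crime literature actually looks like this is exactly what's in dispute.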

With the caveat, yet again, that this is beyond my expertise, I don't get this. If, say, the actual effect of lead on crime is 0.33 on their scale (a "large" effect size) then you'd expect to find papers clustered around that value. Since zero is a long way away, you wouldn't expect very many studies that showed zero association or less.²
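A rough way to put numbers on this intuition, assuming every study estimates the same true effect of 0.33 with normally distributed sampling error. The standard errors below are hypothetical values chosen for illustration, not figures from the paper, and the function name is made up for this sketch.

```python
import math


def prob_at_or_below_zero(true_effect: float, std_error: float) -> float:
    """P(estimate <= 0) when estimates are Normal(true_effect, std_error)."""
    z = (0.0 - true_effect) / std_error
    # Standard normal CDF evaluated at z, written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical standard errors, from precise studies to very noisy ones.
for se in (0.05, 0.10, 0.20, 0.33):
    p = prob_at_or_below_zero(0.33, se)
    print(f"std error {se:.2f}: P(estimate <= 0) = {p:.4f}, "
          f"expected out of 24 studies = {24 * p:.2f}")
```

The answer depends heavily on how noisy the individual studies are: with small standard errors almost no study should land at or below zero, while very noisy studies would produce a few. That sensitivity is one reason the question can't be settled by eyeballing the count of positive results.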

So that's one question. The authors also state that homicide rates provide the best data for studies of lead and crime, which presumably gives homicide studies extra weight in their analysis of "high-quality" studies. In fact, because the sample size for homicides is so small, exactly the opposite is true. In general, studies that look at homicide rates in the '80s and '90s simply don't have the power to be meaningful. The unit of study should always be an index value for violent crime and it should always be over a significant period of time.

And there's another thing. These crime studies aren't like drug efficacy studies, where pharma companies have an incentive to bury negative results. Nor are they the kind of study where you round up a hundred undergraduates and perform an experiment on them. A simple thing like that is just not worth writing up if it shows no effect.

On the contrary, studies of lead and crime are typically very serious pieces of research that make use of large datasets and often take years to complete. It's possible that such a study couldn't find a home if it showed no effect, but I'm not sure I buy it. Lead and crime is a fairly hot topic these days with plenty of doubters still around. Well-done papers showing no effect would probably be welcomed.

For what it's worth, I'd also note that the lead-crime field is compact enough that practitioners are typically aware of research and working papers currently in progress. They'd notice if lots of studies they knew about just disappeared for some reason. And maybe they have! But if so, they're keeping mighty quiet about it. Someone should ask them.

I have a few other questions about this meta-analysis, but they aren't important. The primary question is pretty simple: Is the lack of negative results due to papers not getting published for one reason or another? Or is it the result of lead having a substantial effect, which makes it very unlikely for a study to show an association less than zero just by chance?

And this is where I have to stop. I can bring up questions about this meta-analysis, but the authors' model is too complex for me to assess. I also don't have enough background in the meta-analysis biz to judge the paper as a whole. Someone else will have to do it, and it will have to be someone who (a) is familiar with the lead-crime field and (b) has the mathematical chops to dive into this. Any volunteers?

¹The paper shows a spike of results right around zero that's not symmetrical. That is, there's a clump of results just above zero and not very many just below. They conclude that this suggests publication bias, which is possible. But it might also just be an example of p-hacking, where researchers putter around in their data until they can nudge it just above zero. This is also a known problem, but it would have only a small effect on the reported effect of lead on crime.

²As it happens, the paper doesn't show this. There's a kinda sorta spike around 0.33, but only if you squint. For the most part, there's just a smooth array of results from 0 to 1. If this is accurate, it is a bit odd, but hardly inexplicable since every study of lead and crime is working with entirely different datasets.

POSTSCRIPT: One unrelated issue that the paper raises is that violent crime in Europe generally rose in the 1990s and aughts, even as gasoline lead decreased. I've read specific papers about Sweden, Australia, and New Zealand that support the lead-crime hypothesis, all of which showed crime declines as lead levels dropped. I also know that violent crime in the UK shows an almost perfect correlation with lead levels as violent crime rose and fell. And of course Rick Nevin has shown good correlations in a number of countries.

This is just off the top of my head, and I don't know the overall data well enough to comment any further—though keep in mind that Europe generally banned leaded gasoline well after the US, so their violent crime rate kept increasing long after the US too. In any case, maybe I'll look into it. If it turned out that most European countries showed little correlation between lead levels in children and violent crime rates later in life, it would be a serious setback to the lead-crime hypothesis.

I would think every "post-lead" increase in violent crime would be a small counter-argument against the hypothesis being "the" reason, unless it could be shown to be novel to the time rather than another example of something that also occurred in the era of lead.

As Kevin has pointed out in the context of the United States, the final phasing-out of leaded gasoline in the 90s means that the strong reduction effect on crime should have been over by about 2010. The "lead-crime" hypothesis doesn't hold that other influences cannot have an effect on crime, only, rather, that the huge increase in environmental lead from the 20s through the 60s meant that lead was the prime driver in increased crime levels (through the 1980s, that is, after which, the effect tapered off, resulting in less crime).

So, sure, post-2010 in the US (different dates would apply to other countries), other processes could (and surely will) affect crime levels.

And the lead-crime hypothesis says we should still see high numbers of violent crime among those older than 40...

...And we do.

"The authors present a model that says there should be more papers showing negative effects just by chance."

Huh. I wonder what evidence they give for the validity of this "model" ?

"We think that there ought to be more papers showing that F != ma than we see, just by chance; so let's stipulate the existence of those papers, and voila! F = ma is unsubstantiated!"

Maybe you're not describing it accurately, but the way you describe it, these people are ridiculous.

That’s my takeaway from KD’s post as well. Some sort of publication bias discount factor, or threshold, such that any number of positive results below that threshold means no positive result overall.

I wonder what their methodology for deriving THAT number was ...

Probable evidence is Rick Snyder's money.

I bet the Nerd Governor funded this metastudy to delegitimize the negatory impacts of lead in the runup to his trial over the handling of Flint water contamination.

Thank you -- I was going to make precisely the same point. Unless there's something Kevin hasn't told us, the "model" is facially absurd.

The 'circular filing' of results that don't confirm investigators' hypotheses is a real problem...so much so that a 'Journal of Negative Results' was started to publish these findings. A perhaps more serious problem is tweaking (or even inventing) hypotheses after the data analysis has been done. I don't blame academics...publish or perish (or get stuck as an adjunct or lecturer/instructor) is a real thing and negative results aren't 'sexy'.

Publication bias is a problem, yes, but it doesn't happen at random. Failed attempts at direct replication are reasonably likely to be submitted and published. On the other hand, researchers who hope to extend a published finding, or use it as a methodology in another problem area, are less likely to submit, assuming that they may have done something wrong. But the criteria for inclusion set by the authors of the meta-analysis would have excluded any study that wasn't at least a constructive replication. So my expectation is that publication bias would be a rather small factor.

Honestly, I didn't read far enough to find a description of their bias model, if any was given. Although I used inferential statistics on and off throughout my career, I started out somewhat skeptical of the idea of automating inference from data, and grew more skeptical over the years. I would be much more interested in knowing the particular ways in which the studies that showed little or no effect differed from those that showed meaningful effects. The goal should be understanding, not statistical 'significance'.

Well, let's see: when you adjust for population, violent crime began to trend higher in the late '50s, exploded from the mid-'70s to the early '80s, and went down bumpily for the next 20 years, but didn't get back to pre-late-'50s levels until the 2000s.

I think violent crime not related to gang activity is where we need to look.

How many of these studies involve controlled experiments on lab animals?

Now you're speaking midgard & atticus language.

Do animals do crime??

Planet of the Apes is a Mutual of Omaha documentary.

They can act in abnormally aggressive and irregular ways that could be characterized, and exhibit lower than average intelligence.

"The authors present a model that says there should be more papers showing negative effects just by chance."

This is the same way that because Republicans keep getting convicted of one kind of corruption after another it's impossible that Hillary isn't even guiltier.

Yes, this is the problem with many economic or statistical models. They start from an unproven and unprovable assumption and reason from there. Result is GIGO.

I’m not sure that murders are necessarily a good indication of crime that is induced by organic brain defects or lead consumption. On the one hand, I lived through the “crack wars” and suchlike in the 1980s, and the insane violence certainly fits Kevin’s hypothesis, particularly rage-filled murders within marriages or killings that escalated from trivialities to gruesome murders in the blink of an eye.

But on the other hand, specifically for murders, a good number of the murders that I became familiar with during that time were not done out of anger but for reasons which were morally abominable but contextually logical. I can see how lead creates the culture of “New Jack City,” but that culture wasn’t much different from every other crime wave in that it was fueled by the perception of easy money. Not sure what to think.

During the late 70’s I worked in juvenile hall in Los Angeles. Lots of gang members there including Crips, Bloods, and various Latino gangs. Not unusual for those “kids” to use gasoline to get high.

No wonder those people are so screwed up. I can’t imagine sniffing gasoline. I used it at the one autopsy I attended and wasn’t sure which was more sickening.

I applied for a job as an investigator with the Los Angeles Coroner’s office. I scored really high and part of the job interview was a tour of the morgue. As it happened it was over 100 degrees in LA that day and the morgue was in the basement. The chemical smell was overpowering. To this day when I think about it I can “smell” it.

One of the bodies was some guy who had threatened to jump off a 30 story building. A heroic cop talked him down. He was taken to a hospital and released. He went back to the building and jumped.

You know how in the movies people always go to identify a body and they open one of those drawers where bodies are stored? That’s not what I saw. Bodies were in a large room with 4-tier metal trays, with the bodies in plastic bags.

I decided I didn’t want that job.

Yeah, I’m one hundred percent with you. I’m not sure if our morgue really smelled like dead humans but it always did to me and I always felt sick on the rare occasions that I had to go inside. It was such a creepy place.

To crib from the Dread Pirate Roberts, anyone pushing a silver bullet answer is selling something.

By narrowing their indicator for violent crime, the authors make a significant change to the research question at the heart of this issue, IMHO. How many of the papers under review focus only on homicides?

So the evidence that more studies showed lead had no effect was that there were not many papers showing lead had no effect? Yah, sure. And we know Hillary planned Benghazi because there was no evidence she did. Meta-studies should always be taken with a grain (or two or three) of salt.

Much like the absence of evidence of fraud in the New Hampshire 2020 election audit is actually proof of fraud in the New Hampshire 2020 vote.

Was hoping Christian Pulisic would shout out the Granite State truthseekers in his Champions League postgame interview. Or, at least, our real president, Donald John Trump, Sr. (Between Mickelson at the PGA last week & Pulisic this week, it's been a good week for sports MAGA. Plus, KKKlay Travis succeeding El Rushbo.)

I tend to believe the meta-analysis's conclusion that negative- or zero-effect results have been lost to publication bias, and I believe they had good quantitative justification for that, though I haven't seen those details.

Just as a hint, look at the chart Kevin gives. Those 24 results are certainly not clustered at some large value like 0.33 (that's Kevin's example of "large"). Rather, they seem to pile up within a narrow range of negative or low positive values between -0.02 and +0.10. The absence of any leftward (more negative) tail in contrast with the long rightward (more positive) tail strongly suggests some bias has operated.

That would be the reasonable conclusion to draw if the studies were conducted according to a common protocol, only drawing different samples from the same population, and applying the same analysis methodology. Then one would expect the results to be approximately Gaussian due to random error. On the other hand, there might be systematic differences between the studies that show little or no effect, and those that show real-world-meaningful effects. In that case, the distribution of PCCs would be difficult if not impossible to model.

The model might be murky, but I don't have to expect a Gaussian distribution to see that something is inconsistent about the various results, and I don't know how to pick out the underlying truth. I can't even look at them all and say the truth must be somewhere in the middle.

Something is certainly inconsistent in the results. The question is, is that because of inconsistency in methodology?

I don't know. Good points.

Meta-analyses are like grand juries. They can be useful and powerful tools. Or they can indict a ham sandwich.

Thanks, Kevin. I knew you'd weigh in on this before too long, and I've been looking forward to it.

I know meta-studies are supposed to be a gold standard, but I've often found them to be riddled with selection bias, which ruins the data set.

Many end up saying, in effect, that some treatment isn't effective because patients are a minority of the general population and the general population isn't affected positively by the treatment. It's nuts.

It is true that null-effect results are not usually published. In this particular instance though the situation is different. Lead has never been the preferred explanation of the crime-science establishment. So every null-effect paper would likely have been published since it would support the majority position that the lead hypothesis is bonkers.

+1 broken window

Agree. As I said above, publication bias is not random across research issues.

From the June 10 issue of The New York Review of Books:

"...for the last twenty-five years America has enjoyed what sociologists call “The Great American Crime Decline.” The United States is the safest it’s been in its history." and its footnote:

"According to the 2019 annual FBI report covering more than 18,500 jurisdictions around the country, the violent crime rate fell 49 percent between 1993 and 2019. Based on data from the Bureau of Justice Statistics, which compiles survey responses from approximately 160,000 Americans on whether they were victims of crime, the rate fell 74 percent. Significantly, as the Pew Research Center noted in November 2020, public perceptions about crime in the US often don’t align with the data: “In 20 of 24 Gallup surveys conducted since 1993, at least 60% of US adults have said there is more crime nationally than there was the year before, despite the generally downward trend in national violent and property crime rates during most of that period.”

It's hard to argue that elimination of lead didn't have some effect on crime reduction.

Alas, we have a different kind of lead poisoning, a very acute form, affecting us now.

Gun violence and mass shootings are up while the rest of "violent crime" is down. I haven't looked up the correlations between gun violence as a function of overall violent crime and gun availability.

As for fear of violence--that is driven by reporting. Local stations will report on mass shootings that happen across the country, and that makes people feel like things are more violent in their area.

Black people, particularly black men, commit well over 50% of the homicides in this country.

Since in real life I'm something of an applied mathematician (tons of machine learning sort of stuff), I thought I'd do a few calculations on the data.

1) The Bayesian estimate of the effect is about 0.19-0.20. (The expected value of the posterior is 0.19-0.20.) The value varies a bit depending on what significance is assigned to the error bars in the figure. The paper itself starts off down the Bayes road, but veers into frequentist weeds pretty darn quickly.

2) The estimated errors in the original papers, or as normalized, are far too low. Some of the estimates are 0. That's simply not feasible.

3) The estimated probability of any given paper being correct depends highly on the estimated variance of the paper. This is a side effect of point 2.

I can supply the python code if you want. I'm not thoroughly happy with how I estimated the prior, but it's not completely wrong.

After posting, I realized that there was a simple way to keep the prior distribution well bounded (it was underflowing to zero).

The expected value is 0.25

The simple way is to sum the logs and then normalize the max sum of logs to zero.

This doesn't affect any of the other things I said.
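For anyone curious what the "sum the logs" trick looks like, here is a minimal sketch. It is not the commenter's actual code. It assumes a flat prior over a grid of candidate effect sizes, Normal likelihoods, and made-up estimates and standard errors; the function names are hypothetical.

```python
import numpy as np


def naive_posterior(estimates, std_errors, grid):
    """Multiply likelihoods directly; can underflow to all zeros."""
    z = (estimates[None, :] - grid[:, None]) / std_errors[None, :]
    return np.prod(np.exp(-0.5 * z**2), axis=1)


def log_posterior(estimates, std_errors, grid):
    """Sum log-likelihoods, shift so the largest sum is 0, then exponentiate."""
    z = (estimates[None, :] - grid[:, None]) / std_errors[None, :]
    log_post = -0.5 * np.sum(z**2, axis=1)
    log_post -= log_post.max()  # the best grid point now has log value 0
    post = np.exp(log_post)
    return post / post.sum()  # normalize to a probability distribution


# Hypothetical data: widely spread estimates with very small reported errors.
estimates = np.array([0.0, 0.2, 0.5, 1.0])
std_errors = np.array([0.01, 0.01, 0.01, 0.01])
grid = np.linspace(-1.0, 1.0, 2001)  # flat prior over this range (assumption)

naive = naive_posterior(estimates, std_errors, grid)
post = log_posterior(estimates, std_errors, grid)
expected_value = float(np.sum(grid * post))

print("naive product sums to:", naive.sum())
print("posterior expected value:", round(expected_value, 3))
```

With tiny standard errors and results that disagree, the raw product of likelihoods is too small to represent in floating point everywhere on the grid. Working in logs and subtracting the maximum keeps the shape of the distribution intact while avoiding that underflow.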

Only conclusion I can reach is that there are people in Academia who don't have enough substantive work to do to keep them productively occupied.

In any case, the issue is moot — we’re not going to put lead back into gasoline, or paint, or plumbing. So any contribution of lead to increased crime is permanently declining to essentially zero.