In the previous post I said I was "prowling around" looking for something else when I happened to come across that chart about conspiracy theories. So what was I looking for?

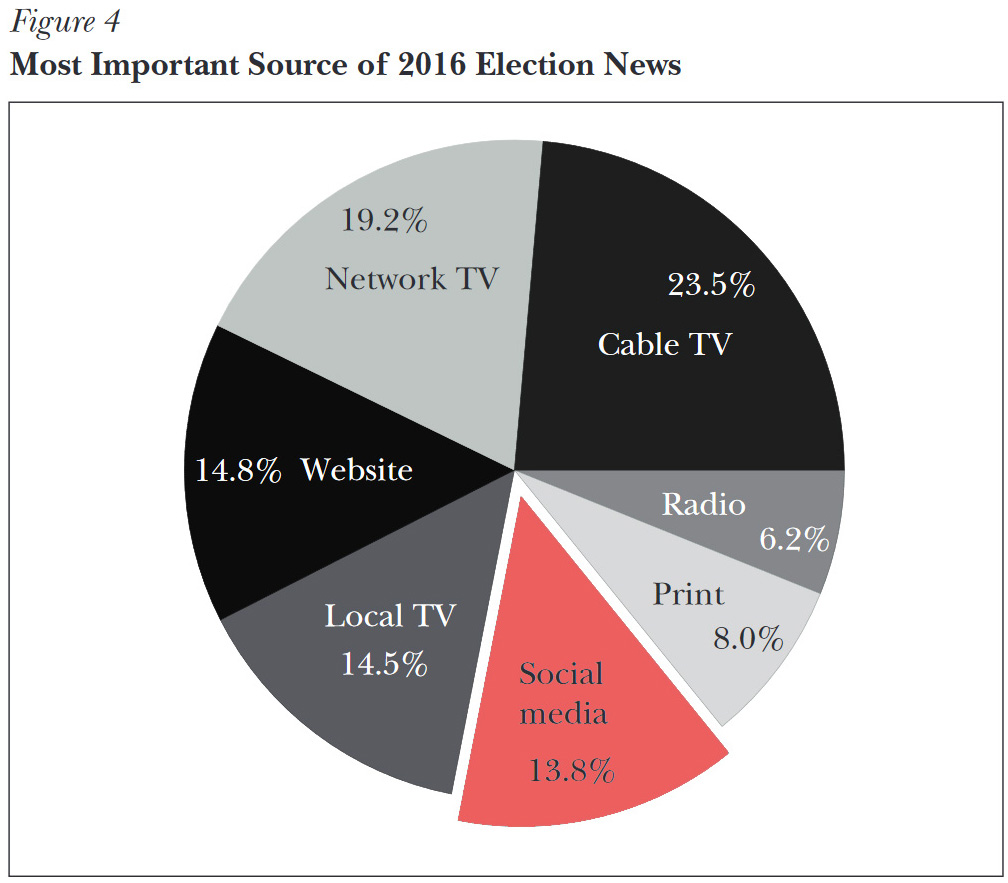

Answer: the impact of Facebook on elections. There are plenty of studies about the impact of Fox News on elections, all of them showing a fairly significant effect, but hardly any about social media. It's obviously too early to expect anything about the 2020 election, but Facebook was front and center during the 2016 election and there ought to be some research about that.

But there isn't. This is why I was interested in the paper by Hunt Allcott and Matthew Gentzkow, which looked at the impact of fake news on the 2016 presidential election. They are forced to make some fairly heroic assumptions along the way, but in the end here's what they say:

The average Fake article that we asked about in the post-election survey was shared 0.386 million times on Facebook. If the average article was seen and recalled by 1.2 percent of American adults, this gives (0.012 recalled exposure)/(0.386 million shares) ≈ 0.03 chance of a recalled exposure per million Facebook shares. Given that the Fake articles in our database had 38 million Facebook shares, this implies that the average adult saw and remembered 0.03/million × 38 million ≈ 1.14 fake news articles from our fake news database.

....As one benchmark, Spenkuch and Toniatti (2016) show that exposing voters to one additional television campaign ad changes vote shares by approximately 0.02 percentage points. This suggests that if one fake news article were about as persuasive as one TV campaign ad, the fake news in our database would have changed vote shares by an amount on the order of hundredths of a percentage point. This is much smaller than Trump’s margin of victory in the pivotal states on which the outcome depended.
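For anyone who wants to check the arithmetic in that passage, here is a quick sketch in Python. The four input numbers come straight from the quoted text; the variable names are my own, and treating one fake news article as exactly as persuasive as one TV ad is the authors' stated assumption, not an established fact.

```python
# Inputs taken from the quoted Allcott and Gentzkow passage.
shares_per_article_millions = 0.386   # average Facebook shares per surveyed fake article
recall_rate = 0.012                   # share of adults who saw and recalled the average article
total_shares_millions = 38            # Facebook shares of all fake articles in their database
ad_effect_pp = 0.02                   # Spenkuch and Toniatti: vote-share shift per extra TV ad

# Chance of a recalled exposure per million Facebook shares.
exposure_per_million_shares = recall_rate / shares_per_article_millions

# The passage rounds that rate to 0.03 before scaling up, which gives 1.14.
articles_rounded = round(exposure_per_million_shares, 2) * total_shares_millions

# Carrying the unrounded rate through gives a slightly larger figure.
articles_unrounded = exposure_per_million_shares * total_shares_millions

# Assumption (the authors' benchmark): one fake article persuades like one TV ad.
shift_rounded_pp = articles_rounded * ad_effect_pp
shift_unrounded_pp = articles_unrounded * ad_effect_pp

print(f"exposure per million shares: {exposure_per_million_shares:.4f}")
print(f"articles per adult (rounded rate): {articles_rounded:.2f}")
print(f"articles per adult (unrounded rate): {articles_unrounded:.2f}")
print(f"vote-share shift, percentage points: {shift_rounded_pp:.4f} to {shift_unrounded_pp:.4f}")
```

Either way the implied shift is a couple of hundredths of a percentage point, which matches the "order of hundredths" in the quote.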

I suspect that even this overstates the influence of Facebook since that "average" of 1.14 fake news articles is almost certainly skewed heavily toward pro-Trump voters. I'd guess that the average MAGA voter saw dozens of fake news articles on Facebook while everyone else saw an average of about zero. Thus, they were seen only by people who were already committed to Trump regardless.

In any case, what little evidence I've been able to piece together is consistent with the conclusions from Allcott and Gentzkow: the impact of disinformation on Facebook is quite small. Hopefully we'll get a better look at this over the next year or so as studies of the 2020 election are published.

Thus, they were seen only by people who were already committed to Trump regardless.

But doesn't that tsunami of misinformation have the effect of hardening the view of people already subject to extremist and disordered thinking?

It confirms for them what they want to hear with no ameliorating alternatives.

I don't think that's the way to think about it, Kevin. The average MAGA voter saw multiple articles confirming and reinforcing their worldview. Read, for example, _Homo Deus_ where Harari discusses the mindset of a villager raised in an environment where everyone reinforces the existence of God. Pages 147 to 149 of my copy, near the end of the "Web of Meaning" section.

Even if it is a small channel of confirmation bias, it provides an additional separate channel.

I think the impact of fake news and nonsense on social media is not so much about taking some sort of average undecided voter, showing them some fake stories and presto! someone is now a Trump voter. At least that's not what I'm concerned about.

What social media, and particularly YouTube, can sometimes cause is what you might call the "rabbit hole" effect: you look at one article or video or post that happens to have a slight rightward tilt. The "AI" bots then say, hey, well if you like that, how about this more right-wing content? And if you like that, then how about some insane made-up nonsense? Before you know it, you're in QAnon land.

I know people this has happened to, and as I mentioned in a comment a couple of months back, I saw it happen myself: I looked at one negative YouTube video about the new Wonder Woman movie while not logged in to my Gmail account, and immediately YouTube was suggesting Ben Shapiro videos to me.

This is the phenomenon I'd like to see researched.

Facebook has become such a godawful mess of a site I only use it to follow a few groups that have nothing to do with politics. The idea that many people look to it as a primary news source is implausible.

The true cancer in society is Twitter, which has effectively taken over most of the space once occupied by traditional journalism.

The biggest information bomb in the 2020 cycle was Covid, which ended up a plus for Republicans, as deaths still hadn't risen yet and, due to silly Democratic campaigning restrictions, fatigue ended up being a boon for Republicans in cities, causing Biden to underperform, large and small. White women were a large disappointment.

The Democrats badly need social nationalism to put some testosterone into the party's mindset. Bryan to FDR would have little respect for wimpy messaging.

Yes, such a plus that Republicans lost.

Weird.

Thousands of dead Rohingya Muslims agree: Facebook is of no political consequence.

Two points:

(1) Exposure to fake news is driven by self-selected political bias, so insofar as any fake news influenced the outcome, one would expect the effect to be small. IOW, confirmation bias is to be expected, and one does not need a 26-page paper to describe the process of confirmation bias.

(2) The key to 2016 was Facebook's targeted advertising, not fake news exposure. Facebook's targeted advertising meant that would-be Clinton voters of specific demographics in specific districts could be targeted with negative advertising built on fake stories that depressed votes for Clinton. That's not something covered by this early 2017 research, but it's something we learned a year later.

Yup.

Facebook in the late 2010s is a vote suppressor much as foundational Facebook in 2003-04 was a woman oppressor.

Zuckerberg has issues. He's a softer, Hebraic variation on Houston Texans QB Deshaun Watson.

I don't think the influence is necessarily driven by read-and-remembered articles as much as by general perception based on an incessant drumbeat of negative headlines and snippets. Without clicking on an article, or with just a skim-and-ignore, my perception of an otherwise unknown pol could be skewed: So Gov. Newsom is a hypocritical sleazeball, huh. Without taking that as fact, I would still approach any news about him with that now in mind.

A lot of the comments here touch on important points. Those points and one more:

1) It's not so much the articles clicked on, but the stream of memes that slowly alter what people think. The constant barrage normalizes things that used to be beyond the pale.

2) A single click can alter all of the material that shows up in your feeds across the internet, from the Google news feed on your browser homepage to Facebook ads to YouTube suggestions. That one time I read an article about bitcoin haunted me for months!

3) Covid led a lot of people with very few internet skills to spend a ton of time online. There are a lot of people over the age of 60 who really don't have internet evaluation skills. That's why there are so many sad "QAnon stole my mom" stories these days.

I doubt that a lot of people are even aware of where they are being influenced politically. People tend to remember the specific actions they take - turning on Hannity or reading an article in the local paper - but undervalue the things that they aren't consciously focusing on, like the 15 political memes interspersed with cute baby pictures on Facebook.

+1

It's the creeping bias that's the most insidious. Especially when 'the algorithm' changes your feed without your permission.

1.14 fake news articles is almost certainly skewed heavily toward pro-Trump voters.

But they didn't skew that way at all. We know Cambridge Analytica targeted what they called persuadables with fake news, and it was geo-targeted exactly where they needed it to be in Michigan, Wisconsin and Pennsylvania. And once on that list, they continued to get the messages, which is why you see so many former Obama voters in QAnon today.

No, that's because conservatives love conversion stories.

This seems to falter on the presumption that people have a clue where their biases come from, and that seems unlikely.
