The latest AI chips from Nvidia will get the rock star treatment when they're unveiled next week:

Chief Executive Jensen Huang is expected to unveil his company’s latest chips on Monday in a sports arena at an event one analyst dubbed the “AI Woodstock.”

The new chips are expected to be called B100s and be available in September, UBS analysts said in a note. They could be four times faster than H100s and might cost as much as $50,000, they said, about double what analysts have estimated the earlier generation cost.
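To put the UBS figures in price-performance terms, here is a minimal sketch. It assumes the "four times faster" and "about double" estimates both hold. The roughly $25,000 H100 price is back-calculated from "about double" and was not quoted by UBS. The function name is purely illustrative.

```python
def perf_per_dollar_gain(speedup: float, price_ratio: float) -> float:
    """Relative performance per dollar of a new chip vs. an old one.

    speedup: new throughput / old throughput (UBS: "could be four times faster")
    price_ratio: new price / old price (UBS: "about double")
    """
    return speedup / price_ratio

# Assumed figures: $50,000 for a B100 vs. roughly $25,000 for an H100
# (the H100 price is inferred from "about double", not quoted).
b100_price = 50_000
h100_price = 25_000
gain = perf_per_dollar_gain(speedup=4.0, price_ratio=b100_price / h100_price)
print(f"B100 performance per dollar vs. H100: {gain:.1f}x")  # → 2.0x
```

If the estimates are right, buyers would pay twice as much per chip but get roughly twice the work done per dollar.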

One thing I still don't quite understand is how Nvidia has managed to corner the market for AI chips. The requirements are widely understood, and there are plenty of engineers who know how to design this stuff. What's their secret sauce?

RANDOM NOTE: Donald Trump could probably post bond in his fraud case if he were able to get his hands on about 20,000 Nvidia H100 chips. Real estate might be iffy, but Nvidia chips? Gold!

It isn't so much that their chips are any better. It's that a gamble 20 years ago worked out: first-mover advantage plus their CUDA platform, with prebundled code that makes development easier, and there are no viable competitors out there at the moment.

https://www.marketplace.org/2024/03/08/what-you-need-to-know-about-nvidia-and-the-ai-chip-arms-race/

According to this article

https://www.investors.com/news/technology/ai-chips-amd-stock-vs-nvidia-stock/

"Nvidia provides a full-stack solution for AI computing, including its CUDA software and foundational models and libraries to jump-start development of AI applications."

That stuff is proprietary, and it makes Nvidia the easiest choice. But AMD is the second-biggest player in GPUs, and they can offer a good value proposition.

My guess is that with billions sloshing around, Nvidia can get a premium, but it won't last if AMD doesn't flub. AMD is a big company and their hardware is good.

If I bought stock I would be looking at AMD.

You know, Google's smartphone CPU, the Tensor G-series, handles some AI tasks for the Pixel phones.

ADD: Trump is toast. Start with selling the ostentatiously ugly as fuck golden toilet.

And how much power will one of these things draw?

Example comment from a Reddit discussion on Nvidia vs. AMD for ML:

***

It is a consequence of a brilliant strategy from NVIDIA and the decisions/work some guys at the Berkeley Computer Vision Group did 6-7 years ago.

It's the year 2015. Neural networks and AI have started blooming, and everybody in academia talks about AlexNet and how it broke the ImageNet competition with something called neural networks.

Suddenly, computer vision research teams from all around the world want their piece of the pie and the deep learning framework from the Berkeley Vision Group called "Caffe" becomes mainstream.

Most of the people in academia and research groups use Linux because reasons, and Nvidia was the only one with decent support for the Linux kernel.

This deep learning framework (Caffe) not only simplified the whole process of building neural networks, it could also be compiled with CUDA support, allowing NVIDIA GPUs to greatly reduce the training time of those computationally expensive models. You didn't need a supercomputer and a large budget anymore; any research team with a few thousand bucks could buy a computer with an Nvidia GPU. It was the beginning of AI and supercomputing democratisation.

What did NVIDIA do? A very bold move: give free GPUs to all AI research groups around the world. All you had to do was fill in an online form and wait a couple of months to get a free Titan XP right in your mailbox.

Suddenly, everyone around the globe is building the foundation of all the AI we use today with CUDA and cuDNN dependencies... and Nvidia's tech stack becomes the de facto standard for AI.

When AMD (and Intel too) woke up, it was already too late. The whole landscape was green.

https://www.reddit.com/r/MachineLearning/comments/qq21s6/d_why_does_amd_do_so_much_less_work_in_ai_than/

Just want to push back on "there are plenty of engineers who know how to design this stuff".

It's harder than you think. It isn't just Nvidia who can do it. I'll sign on to that.

Chip design at this scale is not a matter of months. When you see someone doing something in a matter of months, they are heavily stealing from existing designs. So the investment had to be there several years ago, and maybe not everyone made the call.

Also, managing the large teams that can crank out successful silicon at these scales is not badminton. There's more than one organization that can do it, but not really more than half a dozen, maybe a dozen.

If it were easy, Intel would be all over it; the fact that they're not tells you something about how hard this is.

Yup. Nvidia has been at this for 20 years. They did not stand up a new line and BOM last month.

As others have pointed out, they made a very wise and lucky choice in investing in this stuff a while back, and it's given them a massive first mover advantage in terms of building them (to say nothing of proprietary stuff involved).

$50,000 is probably still less than what they could get for them. IIRC Zuckerberg just straight out told them that he would buy their entire year's production of the chips in advance for Meta if they were willing.

I don't think the Nvidia advantage is in the silicon. AMD GPU hardware is competitive.

I think the main issue is a proprietary toolset that speeds implementation.

Regarding Kevin’s “Random Note” it amuses me that “billionaire” Donald Trump is having trouble raising $500 million and pop star Taylor Swift could easily do so.

Not a hardware engineer or tech guy, but I am a hardcore PC and console gamer. My layman's understanding is that NVIDIA basically lucked into its AI chip dominance. What AI and LLMs really need more than anything is simple, raw computing power (same with crypto). GPUs provide lots of computing power, because there is very little that is more resource-intensive than generating millions of pixels in a 3D space in motion.

NVIDIA, moreover, has dominated the GPU space in gaming for years. Based on Steam hardware surveys, something like 70-80% of all gaming PCs have an NVIDIA card installed. Furthermore, NVIDIA has a proprietary graphics enhancement called DLSS. It uses AI to generate frames during gameplay at the expense of a little input lag. So a game that might normally run at only 90 fps can run at 150+, because instead of the GPU rendering every frame in real time, the AI fills in the gaps using predictive models.

As a result, NVIDIA has the manufacturing advantage, the cash advantage, and the technical advantage. They also benefited from a big crypto boom during COVID that had techbros buying scalped GPUs for several times MSRP. NVIDIA made a lot of money, saw the demand, and steered into the skid, so to speak, in recent years.

Remember Saddam's supercomputer made of PlayStation 2s? Pretty sure that specific story was debunked. But the raw horsepower of gaming chips is no joke. This Reddit thread (https://www.reddit.com/r/AskHistorians/comments/l3hp2i/comment/gkgqcwv/?utm_source=share&utm_medium=web2x&context=3) lists a whole host of articles documenting how cheap and effective gaming devices linked together can be.

Saddam Hussein built a supercomputer? The way I heard it, it was one of the local nuclear labs, either Sandia or Los Alamos, that built a supercomputer out of PlayStation chips. It was a big deal on the local news.

The secret sauce is that Nvidia went with the right industry standard and they've been learning how to make better and better chips for decades now. The interface thing was just luck and something that could be remedied in a few years if they had chosen wrong. Building the team and knowledge base is another story. Designing chips is hard even with modern chip design software. There are all sorts of pipelines, caches, interlocks and power issues at play, and figuring out how to get it right can take years. Simulations can get you pretty far, but until you start prototyping with actual silicon, it's just informed guesswork.

P.S. I wouldn't bet on Nvidia. As others have noted, there are other players. Right now, Nvidia can command a big premium, but a competitor could catch up in three to five years given that there is so much money involved. If someone comes up with an actual application for LLMs aside from operating scams, expect even more money and more competition.

The most common platform for ML is PyTorch, which now fully supports AMD's ROCm toolchain. Not much ML work is done directly in CUDA (or ROCm), so Nvidia's platform advantage is slipping away. It's shaping up to be more of a horse race over the next year or two. . .
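To illustrate why PyTorch users rarely touch CUDA directly, here is a minimal sketch of typical device-agnostic PyTorch code. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` API, so the "cuda" device string works on either vendor's hardware. The helper names `pick_device` and `gpu_backend` are hypothetical, and the tiny linear layer is just a stand-in for a real model.

```python
import torch

def pick_device() -> torch.device:
    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API (via HIP),
    # so this one check covers Nvidia and AMD GPUs alike.
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")

def gpu_backend() -> str:
    # Reports which GPU toolchain this PyTorch build targets:
    # torch.version.hip is set on ROCm builds, torch.version.cuda on CUDA builds.
    if torch.version.hip:
        return "ROCm"
    if torch.version.cuda:
        return "CUDA"
    return "none (CPU-only build)"

device = pick_device()
model = torch.nn.Linear(128, 10).to(device)   # stand-in for a real model
x = torch.randn(32, 128, device=device)       # a batch of 32 random inputs
y = model(x)
print(device, gpu_backend(), tuple(y.shape))
```

Nothing in the model code names a vendor, which is the commenter's point: switching hardware is mostly a matter of installing a different PyTorch build.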

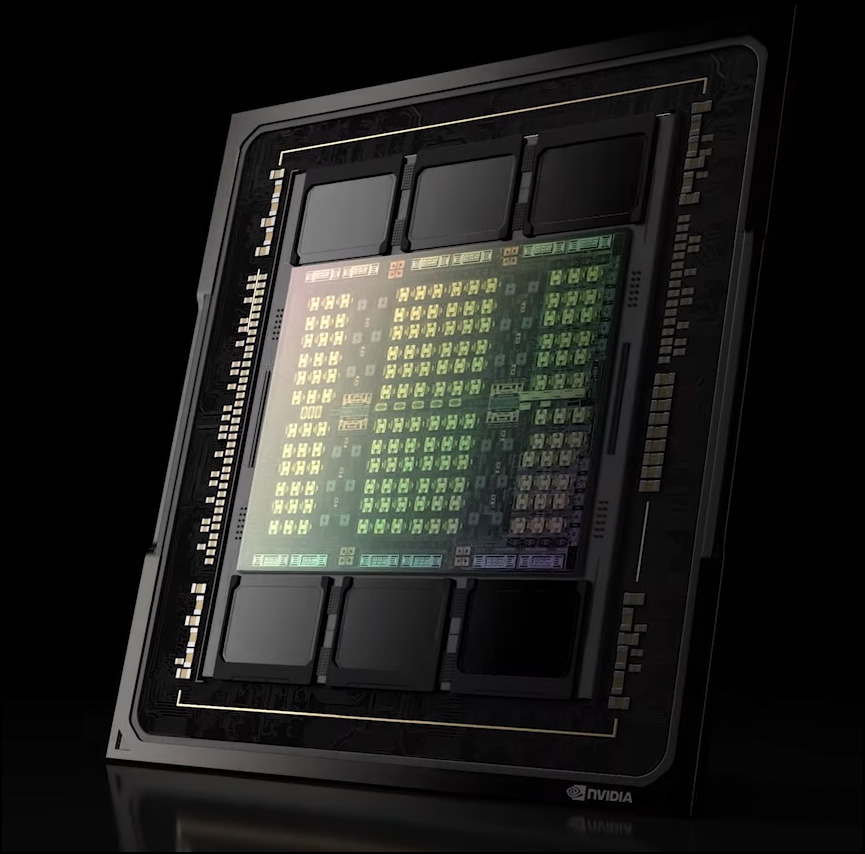

Yeah--not sure I'd refer to this as "a chip"....

https://www.techpowerup.com/gpu-specs/h100-sxm5-80-gb.c3900

Multi-core chips have been around for a while, but almost 17K cores? And that's for the current version, not the new one. If you'd spend $100 for an 8-core chip, then $30K for 2,000+ times that number of cores is a bargain.
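The commenter's back-of-the-envelope math can be checked with a minimal sketch. It assumes $100 for an 8-core CPU (the commenter's hypothetical), $30,000 for an H100 (the commenter's figure), and the 16,896 CUDA cores listed for the H100 SXM5 on the linked spec page.

```python
# Assumed figures: $100 / 8 cores for a CPU (hypothetical from the comment),
# $30,000 / 16,896 CUDA cores for an H100 SXM5 (per the linked spec page).
CPU_PRICE, CPU_CORES = 100, 8
GPU_PRICE, GPU_CORES = 30_000, 16_896

cpu_cost_per_core = CPU_PRICE / CPU_CORES   # 12.5
gpu_cost_per_core = GPU_PRICE / GPU_CORES   # about 1.78
core_multiple = GPU_CORES / CPU_CORES       # 2112.0

print(f"CPU: ${cpu_cost_per_core:.2f}/core")    # → CPU: $12.50/core
print(f"GPU: ${gpu_cost_per_core:.2f}/core")    # → GPU: $1.78/core
print(f"GPU has {core_multiple:.0f}x the cores")  # → GPU has 2112x the cores
```

The comparison is as loose as the joke: a CUDA core is a much simpler unit than a CPU core, so this counts units, not capability.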

😉

Their secret sauce is that they got there first. Nvidia chips have long been used for purposes having nothing to do with games (see CUDA and box-of-GPUs supercomputers). No one else has bothered with this until recently.

"...The requirements are widely understood, and there are plenty of engineers who know how to design this stuff. What's their secret sauce?"

It's not just whether other companies can do it, it is how fast they can do it. If Nvidia can get their chips to market even just a few months sooner they have a tremendous advantage. As long as Nvidia can keep rapidly improving their chips they will be hard to catch.

"What's their secret sauce?"

Their first secret sauce is an extremely complete software stack.

Ever since they gambled big on programmable GPUs rather than fixed-function HW, they have been growing and growing the selection of SW they offer.

You can see something of the range by looking at

https://www.nvidia.com/en-us/geforce/geforce-experience/

going up to the menu and choosing Products/Software or Solutions.

Couple that with close collaboration with academia, and it's a no-brainer that you might as well buy an nVidia product and use their software, rather than having your $120K/annum PhD spend a year writing kinda-equivalent software that doesn't run as well on someone else's hardware.

Their second secret sauce is that they're still a founder-run company. The point is, they COULD behave like Intel and go to sleep for ten years, or just decide that the current pace is too hard. But Jensen has ambitions, and he hires people with the same level of ambitions. nVidia is not run by finance (they make money, but the GOAL is the product, not the money) and nVidia is not run by marketing (changes are made based on actual genuine value, not on "will make marketing/benchmarks look good"). They are run by engineers to perform consistently great engineering.

The only other company run this way that I can think of is Wolfram.

nVidia is also extremely nimble.

The only equivalent is Apple, and while Apple is just as nimble, the matched speeds mean that Apple is always lagging a few years behind. (Along with, of course, they are optimizing for different things. Apple GPU/AI HW will look less impressive by nVidia metrics, nVidia less impressive by Apple metrics.)