The Wall Street Journal reports today that Instagram "helps connect and promote" a huge network of pedophile sites:

Pedophiles have long used the internet, but unlike the forums and file-transfer services that cater to people who have interest in illicit content, Instagram doesn’t merely host these activities. Its algorithms promote them. Instagram connects pedophiles and guides them to content sellers via recommendation systems that excel at linking those who share niche interests, the Journal and the academic researchers found.

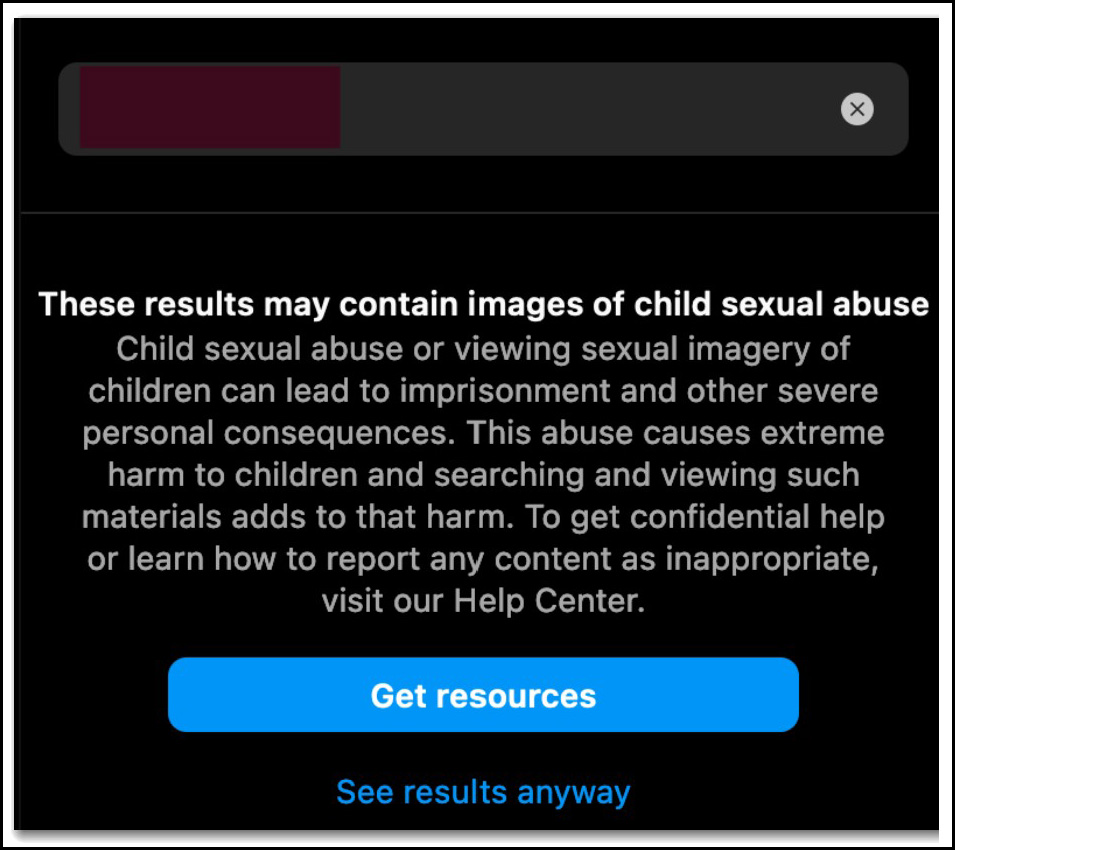

A related report from Stanford's Internet Observatory notes that searches on Instagram for pedophile content sometimes throw up a warning—but, oddly, include an option to "see results anyway":

It's one thing to host content inadvertently. No social media platform can monitor and control 100% of its content. But the Achilles heel of these platforms (for illicit content but also more generally) is their recommendation algorithms, which have the capacity to actively promote and connect users to dangerous or addictive material. Instagram is especially culpable in this case because the Internet Observatory report notes that TikTok doesn't have a similar pedophilia problem. "TikTok appears to have stricter and more rapid content enforcement," the report says, and if TikTok can do it then so can Instagram, if it bothers to try.