Tyler Cowen weighs in today on a study of the lead-crime hypothesis:

These results seem a bit underwhelming, and furthermore there seems to be publication bias....I have long been agnostic about the lead-crime hypothesis, simply because I never had the time to look into it, rather than for any particular substantive reason. (I suppose I did have some worries that the time series and cross-national estimates seemed strongly at variance.) I can report that my belief in it is weakening…

Hmmm. I suppose that a quick look at the abstract of one paper might very well weaken your belief in something if it's the only thing you've ever looked at. Unfortunately, even mild pronouncements from Tyler tend to carry a lot of weight, so I suppose I should comment on this even though I'm sort of tired right now and don't really feel like it.

But let's do it anyway! I shall sprinkle exclamation points throughout this post in order to simulate energy and enthusiasm. But I'm afraid it's going to be kind of long and boring anyway. That's just the nature of these things. If you want to read along, the study is here.

First, though, just to get this out of the way: I don't know what Tyler means when he says "the time series and cross-national estimates" are at variance. I've looked at both and they seem to agree fine. Time series estimates tend to show that crime goes up and down based on lead levels in the past (i.e., during childhood), while cross-national estimates tend to show that the peaks and troughs of crime line up with the rise and fall of leaded gasoline, which happened at different times in different countries. I'm not sure what the variance between these two types of studies is supposed to be.

But let's move on. I wrote about the study at hand a couple of years ago, and you can read my initial thoughts here. There are a few things to note:

- It's a meta-study, which means it tries to average out the results of all the primary studies on lead over the past couple of decades.

- It concludes that there's publication bias in the published studies. This is probably true, since I suspect there's publication bias in every field of study. To put it in its simplest terms, publication bias is the tendency for big, exciting results to get published while small, boring results never get written up in the first place—and if they do, they tend not to get published. This skews the public evidence in favor of positive findings.

- It includes several other results, too, some of which I have a hard time reconciling. The main one, of course, is the conclusion that the effect of lead on the crime decline of the '90s is fairly small.

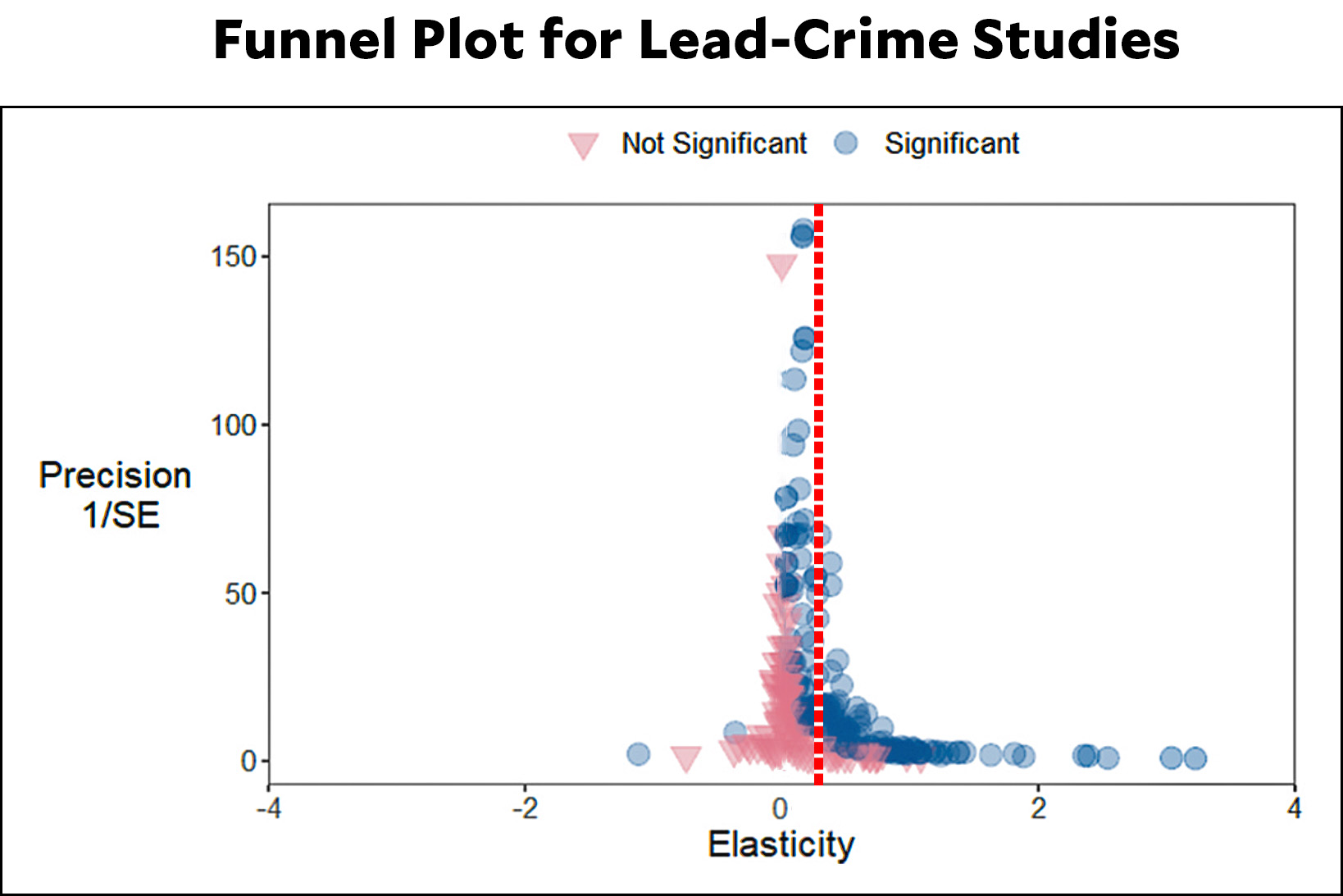

Let's start with publication bias. The authors provide two different "funnel plots" that estimate publication bias. One uses partial correlations while the other uses elasticities:

The elasticities measure the percent change in some measure of crime, given a percent change in some measure of lead pollution. They provide a better measure of the real effect rather than the measure of statistical strength the PCCs provide.
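
For readers who want the definition spelled out, here is a minimal sketch of an elasticity computed from two before-and-after measurements. The function name and all of the numbers are hypothetical and chosen only to illustrate the arithmetic; they are not taken from the meta-study, which pools estimates from many regressions rather than computing anything this simple.

```python
import math

def log_elasticity(lead_before, lead_after, crime_before, crime_after):
    """Percent change in crime per percent change in lead.

    Uses log differences, a common way to approximate percent changes.
    """
    pct_change_crime = math.log(crime_after / crime_before)
    pct_change_lead = math.log(lead_after / lead_before)
    return pct_change_crime / pct_change_lead

# Hypothetical illustration: lead exposure is cut in half and the
# crime rate falls from 100 to about 87 per 100,000.
crime_after = 100 * 0.5 ** 0.2
elasticity = log_elasticity(10.0, 5.0, 100.0, crime_after)
print(round(elasticity, 3))
```

An elasticity of 0.2 would mean that a 10 percent drop in lead goes along with roughly a 2 percent drop in crime. A partial correlation, by contrast, only says how tightly the two move together, not how big the change is.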

Fine. Here's the funnel plot using elasticities:

I've modified this plot to place a line roughly in the center of the data. If there were no publication bias at all the data would be symmetrical around this line. It's not. But it's also not that far off. This and other measurements suggest that publication bias is present. At the same time, this, along with other considerations, suggests the publication bias is not huge.¹
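
To see why publication bias shows up as asymmetry, here is a rough simulation sketch. Everything in it is an assumption made for illustration: the true effect of 0.1, the range of standard errors, and the rule that significant positive results always get published while other results get published 30 percent of the time. None of these numbers come from the meta-study.

```python
import random
import statistics

def simulate_studies(n, true_effect, rng):
    """Draw n (estimate, standard_error) pairs scattered around one true effect."""
    studies = []
    for _ in range(n):
        se = rng.uniform(0.05, 0.5)           # small studies have a large se
        estimate = rng.gauss(true_effect, se)
        studies.append((estimate, se))
    return studies

def publish(studies, rng, null_publish_prob=0.3):
    """Keep every significant positive result; keep the rest only sometimes."""
    published = []
    for estimate, se in studies:
        significant = estimate / se > 1.96
        if significant or rng.random() < null_publish_prob:
            published.append((estimate, se))
    return published

def mean_effect(studies):
    return statistics.fmean(estimate for estimate, _ in studies)

rng = random.Random(0)                        # fixed seed for repeatability
all_studies = simulate_studies(2000, 0.1, rng)
published = publish(all_studies, rng)

print("mean of all studies:      ", round(mean_effect(all_studies), 3))
print("mean of published studies:", round(mean_effect(published), 3))
```

In a funnel plot of the full set, the estimates fan out evenly on both sides of the true effect as the standard error grows. In the published subset, the noisy studies on the low side are thinned out, so the funnel leans to one side and the average drifts upward. How far it leans depends entirely on how aggressive the filtering is.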

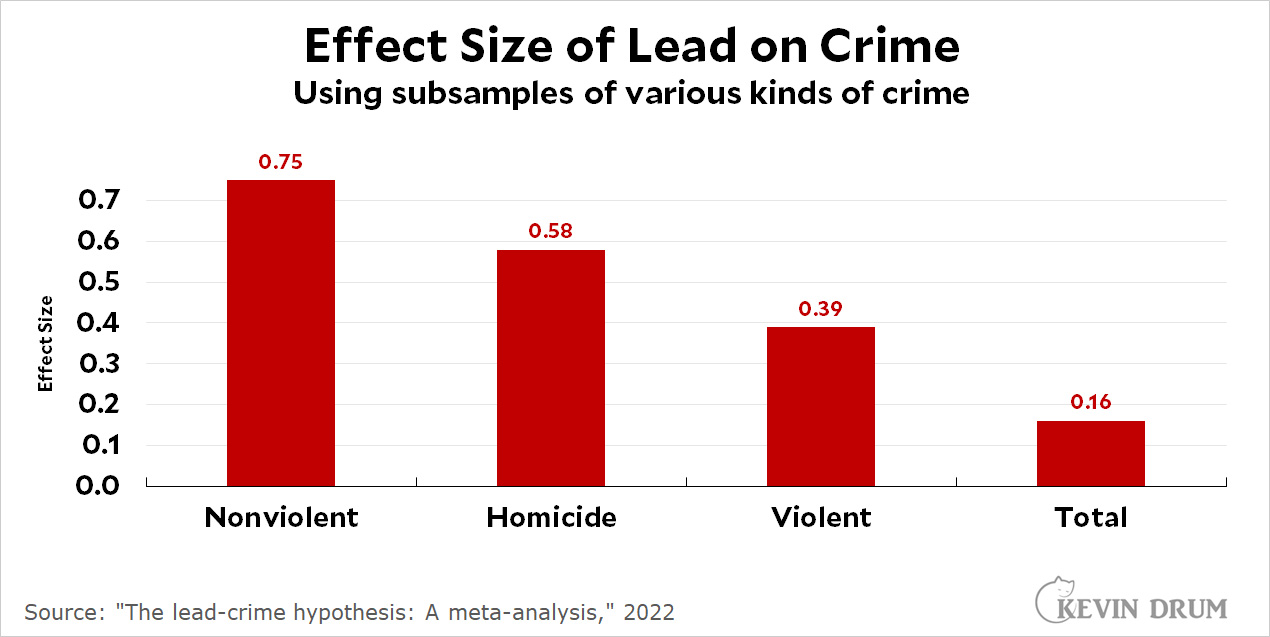

Next up is the measured effect size of lead on crime. The authors provide estimates for various subsamples, shown here:

I have a feeling I'm misinterpreting this data somehow. Everyone in the field agrees that the effect of lead is greater on violent crime than on nonviolent crime, so why are they reversed here? This makes no sense.

I also have an objection to the authors' idea of what an "ideal" study would encompass:

The ideal specification we use is one that includes controls for race, education, income and gender, that uses individual data, directly measured lead levels, controls for endogeneity, uses panel data, is estimated without just using simple OLS or ML, uses total crime as the dependent variable....

The ideal study should focus on violent crime, not all crime. Nor should it focus very much on homicide, which many people think is the most accurate measure of violent crime.² It's not. The problem with homicide is that it has small sample sizes and produces a lot of variation, especially over small time periods. The best measure is violent crime over a long time period.
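
The small-sample point can be made concrete with a rough sketch. It assumes that yearly crime counts behave roughly like Poisson counts, which is an assumption made here for illustration and not something taken from the study. Under that assumption, the random year-to-year wobble relative to the average is about one over the square root of the count. The city-level counts below are hypothetical.

```python
import math

def relative_noise(expected_count):
    """Standard deviation divided by mean for a Poisson count."""
    return 1 / math.sqrt(expected_count)

# Hypothetical mid-sized city: 25 homicides vs. 2,500 violent crimes a year.
for label, count in [("homicides", 25), ("violent crimes", 2500)]:
    print(f"{label}: about {relative_noise(count):.0%} random variation per year")
```

With counts like these, homicide would bounce around by something like 20 percent a year from chance alone, while total violent crime would move by only a couple of percent. At the national level the homicide counts are far larger and the problem shrinks, so this mostly bites in studies of individual cities or short time periods.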

And that brings up a third point: Unless I've missed something, this paper looks only at the association of lead and crime beginning around 1990 when crime began to decline. But that's only half the data. Why do they ignore the rise of crime in the '60s and '70s? That's a big piece of the evidence in favor of the lead-crime hypothesis.

And there's more! As the authors point out, most lead-crime studies are ecological. That is, they compare one area with another (different states, for example) and look to see if one thing—average lead exposure—is correlated with another thing—average crime. These studies are useful but tricky, with lots of pitfalls that can produce spurious results because they compare only averages to other averages.

The gold standard for lead-crime research is a study that compares individuals over time. One type of individual study might measure, say, bone lead levels in a random group of adults and use it to estimate childhood levels, which it then compares to present-day crime records for each person. Another type is a prospective study, where you choose a random group of kids, measure their lead exposure levels, and then look at them 20 years later to see if individual lead levels correspond to individual crime rates. The problem here is obvious: you can only do this if someone collected the childhood data 20 or 30 years ago. This didn't happen very often, and I'm aware of only a few examples:

- Cecil, University of Cincinnati Lead Study

- Sampson, Project on Human Development in Chicago Neighborhoods

- Beckley, Dunedin Multidisciplinary Health and Development Study (New Zealand)

- Liu, 4 preschools in Jintan, Jiangsu province of China

- Needleman, Juvenile Court of Allegheny County

Two of these aren't even included in the meta-study, probably because they didn't fit the authors' fairly stringent requirements. However, all of them show roughly expected levels of increased crime rates in adults who were lead-poisoned as children.

TO SUMMARIZE: I'm not a statistical guru and I don't promise that I have everything right here. Nor should this post be construed as any kind of criticism of the authors. Meta-studies are good things; publication bias is real; and their methodology seems reasonable.

That said, a few things seem a little out of whack. It would be nice if the lead-crime community could take a look and formulate a response of some kind.

¹As I've written before, it's useful to consider how likely publication bias is in any particular area. Are you looking at studies of antidepressants? Pharma companies would love to bury null results, so watch out for publication bias! Are you looking at quick-and-dirty studies that use classrooms of undergrads as their subjects? Those often aren't even worth writing up if the results are uninteresting, so watch out for publication bias!

Lead-crime studies, by contrast, are moderately complicated and take a while to finish. That's a chunk of your career, and you probably don't want to throw one out just because it wasn't super exciting. There are also no big motivations to ignore studies that are inconvenient. Bottom line: this is not a field that seems likely to produce a huge amount of publication bias.

²Homicide has the advantage of being easy to estimate: someone is either dead or they aren't. Other types of violent crime require a certain amount of human judgment to categorize them properly.

Lead in the waffles?

https://nypost.com/2022/12/29/wonder-woman-lynda-carter-says-i-trained-at-waffle-house-after-viral-brawl-video/

So I’m guessing the theory is that lead exposure makes people prone to fits of rage… Like the pathetic women in this video.

The Tyler Cowen who said crypto would surely succeed because so many smart people were working on it?…

Yes I know that logic is unfair…

The Tyler Cowen that's a pilonidal cyst [h/t Rush Limbaugh] on the backside of the body politic? That Tyler Cowen? He's a troll, using his credible econ research as beard to let him troll everywhere else.

A moral cretin.

Well, as has been discussed before in these parts, Cowen seems to me to be an interesting thinker and he runs an interesting podcast with interesting guests, but I was surprised a couple of months ago on the podcast when he said crypto would surely be a big hit because a lot of smart people were working on it. Talk about the snobbery of the educational elites! Anyway, the reality is if you are an interested layman (like me, that is) you don't have time to judge academic studies so you make some overall judgements about who you think is reliable and worth your time. I realize his opinion about crypto has nothing to do with his ability to judge this meta study, but I will say his opinion on crypto changed my opinion on him from curious semi-fan to extra skeptical of whatever he says.

And anyway, isn't it also true that the lead-crime theory is supported by some pretty smart people? I'm wondering why the same standard applied to crypto is not applied to the lead-crime theory... And my conclusion is that Prof. Cowen likes to think the people on his side of the political fence are extra "smart" and therefore their ideas "work" or something similar.

Anyway, bah humbug. One less podcast I have to pay attention to...

Glibertarians are glib.

My take is that many liberals are super-duper eager to prove they aren't in a bubble. Cowen is a "tolerable" conservative (one of X, X<10) so many liberals rush to love on him.

In other curious medical news,

hydroxychloroquine may act against Alzheimer's,

https://pubmed.ncbi.nlm.nih.gov/36577843/

which may suggest why so many wingnuts who took it felt it helped them.

As I've written before, it's useful to consider how likely publication bias is in any particular area.

Agreed. Also, at this point the lead-crime hypothesis is widely known, and has been for a long time. I'd expect publication bias to be a weakish factor in this one area, for the simple reason that silver-bullet research results forcefully and convincingly disproving the hypothesis would be quite a feather in the cap of any researcher.

Thank you for the follow up, Kevin.

PS: Is homicide really associated with such small sample sizes? In the US about 15k-20k people are murdered every year. That seems like plenty! Also, "violent crime" as a datapoint doesn't just suffer from the "human judgment" problem. There's also: changing laws/definitions (what might not have been categorized as a violent crime 20 years ago now is); cross-jurisdictional definitional variances (a bar fight merits a police warning in one jurisdiction and felony assault charges in another); law enforcement resource issues (violent crime may go unreported in a jurisdiction with police staffing issues, or over-reported in a jurisdiction with ample staff, especially if they've got "numbers to make"). I'll take your word for it since you've done a lot of study in this area, but "violent crime" data just feels a lot less clean to me than murder stats (although long term, the latter datapoint is obviously impacted by improving medicine).

Agree. But, I think there are even worse problems in reporting of violent crime: police motivations in some jurisdictions to juke the stats for political purposes. It works something like this:

1. Politicians (say, mayoral candidates) run on a promise to reduce crime.

2. Elected mayor appoints new police leadership with orders to "make things happen".

3. Good police work can only go so far. Eventually, continued improvement depends on manipulating reports.

4. Citizens can be persuaded/intimidated to refrain from reporting, or to report less serious crimes.

5. Police can determine that evidence isn't sufficient to record a criminal incident.

For a depressing account of how this worked in Baltimore, see this:

https://www.themarshallproject.org/2015/04/29/david-simon-on-baltimore-s-anguish?utm_medium=social&utm_campaign=share-tools&utm_source=facebook&utm_content=post-top

A different take on how it worked in New York:

https://www.villagevoice.com/2012/03/07/the-nypd-tapes-confirmed/

Homicides are hard to hide because you have to account for the bodies. Assaults can be buried by non-reporting, or downgraded to something less. I fear that a lot of the crime reduction reported over the last 10 or 15 years is due to deliberate manipulation of statistics.

The Baltimore situation is incredible. I'd highly recommend HBO's "We Own This City" if you haven't seen it.

I don't agree with you completely but I remain baffled why anyone listens to Tyler Cowen. Whenever I bother checking him out at random he is always saying something stupid.

The more I read about meta-studies, the more underwhelmed I get. I suppose there is a reason for them, though I’m not sure we have the ability to do them well yet. Meta-studies suffer from the same biases as any study and include inscrutable decisions. They almost sound like the ultimate “both sides” analyses. More to the point, they don’t actually include any new research; they basically just look at what’s out there. I can imagine it would be easy to create a study to have whatever result one wants, since there are just too many variables. When I read into their analyses, I find them remarkably unfounded. I think it is best to go to the original research and look at the data. There is no doubt that some studies are stronger than others, but meta-studies tend to minimize the differences between the studies. I would like to see how well they work as an analytical tool by looking at published results from the last 20 years and seeing how they hold up. Currently, meta-studies seem to be concocted by current statistical modeling research as make-work.

How very meta...a meta study of meta studies.

😉

The logical question is why do they need to do a meta analysis on publicly available data?

Jessica Gurevitch does a great review of the pitfalls of meta analyses in this article: "Meta-analysis and the science of research synthesis," Nature (nature25753).

Dude, there's high lead and cadmium in chocolate.

https://www.consumerreports.org/health/food-safety/lead-and-cadmium-in-dark-chocolate-a8480295550/

NOOOOOOO!

But dark and ultradark chocolates are the worst. Great news! Or rather, it could be worse. Thanks for the link!

Kevin, how does "AnnieDunkin" continue to evade your filters? Every bloody post; it must be even more annoying for you than for us readers.

I second that emotion.

I am only casually knowledgeable about crime and lead's potential impacts. However, the studies on the impacts of lead are heavily US-centric. Wouldn't the "more lead equals more crime" relationship be a global finding?

I saw a study of the Hanshin region (a very industrial part of Japan that includes Osaka and Kobe): they found basically zero statistical relationship between crime and lead. Japan has very low crime so, if anything, the change would stick out. Similarly, Zurich (the most industrial part of Switzerland) does not show this relationship.

While lead might be a factor, there must be something more complicated going on, else global findings would be similar.

It is a global finding. In fact, one of the strong points of the lead-crime hypothesis is that the phenomenon of "violent crime tracks the introduction and phase-out of leaded gasoline, lagged by ~20 years" shows up in many different countries.

The other thing to keep in mind is that the lead-crime hypothesis was conceived to explain the huge crime wave that swept the U.S. in the '80s and '90s. It's a *historical* hypothesis about a time when lead exposure was far, far higher than it is today; it does not claim to explain much about modern crime rates. If the hypothesis is true, there would still be an effect, but so small that other factors would overwhelm it.

(You might then ask, what's the use of the hypothesis if it can't tell us anything about today? Partly it's about understanding history; partly it's about debunking a lot of pet theories that came out of the fall in crime from the '90s onward.)

Dr. Kim Dietrich at the University of Cincinnati Medical Center has done the best work. Particularly his "Childhood lead poisoning and adult violence: Cincinnati Study" at:

http://journals.plos.org/plosmedicine/article?id=10.1371/journal.pmed.0050101

“Prenatal and postnatal blood lead concentrations are associated with higher rates of total arrests and/or arrests for offenses involving violence. This is the first prospective study to demonstrate an association between developmental exposure to lead and adult criminal behavior.” (2008)

He also showed a physical brain change "Decreased Brain Volume In Adults With Childhood Lead Exposure" which showed:

“Childhood lead exposure is associated with region-specific reductions in adult gray matter volume. Affected regions include the portions of the prefrontal cortex and ACC responsible for executive functions, mood regulation, and decision-making. These neuroanatomical findings were more pronounced for males, suggesting that lead-related atrophic changes have a disparate impact across sexes.” (2008)

I have been fascinated by the lead hypothesis for some time.

One particular aspect of the lead-crime connection that I have found especially interesting is how different categories of crime appear to be affected. The most dramatic declines in the US white juvenile population have occurred in non-violent crimes (property crimes, alcohol crimes, and civil disorder). These criminal categories have largely evaporated, with some rates of property crime declining by over 90%. It already seems inevitable that these crimes could essentially vanish over even the medium term. 50 years ago youth crime was mostly property crime (vs violent crime) by a factor of ~12. The current ratio is ~3 and falling.

Violent crime, drug crime and weapon offenses have been much more resistant to extreme decline. These crimes have declined by ~50% over the last few decades.

How does this fit into the lead-crime hypothesis? I will now get all hand-wavy and make up just-so, after-the-fact stories with pretty words to explain this within the context of lead. I think what is involved here is different cognitive processes.

With property crime such as burglary there is a great deal more planning involved. Committing burglary is much closer to 3D chess than would be a typical violent crime. This would not always be true, though as a general rule of thumb, one would expect a higher cognitive input for many property crimes. Property crime would also exist within the framework of chronic self-apparent life disadvantage. These features suggest that with appropriate social interventions, economic growth etc. and also with reduced lead one might see profound decreases in such property crime. That is exactly what has happened. Burglary rates in US white juveniles have fallen by ~93% since 1980 and are continuing with this rapid decline.

With violent crime the after the fact story is different. Aggravated assault has fallen by only 40% over the last number of decades. Why the difference? Why have most non-violent crimes almost disappeared while violent crimes remain stubbornly relatively high? Violent crime can be extremely impulsive. People with lead damaged brains have minimal executive functioning. The 5% of the population who commit 50% of the violent crime are much more difficult to influence than the property criminals. One is trying to stop a flash of anger which can be triggered by almost any stray remark or glance. Even without lead in the environment, it would seem that these more spontaneous type outbursts would be more difficult to suppress.